Structured Query Language (SQL) is a key tool for working with data in relational databases. As more companies deal with large and messy data, they need easier ways to search and understand it. One growing solution is using natural language – the way we speak – to ask data questions.

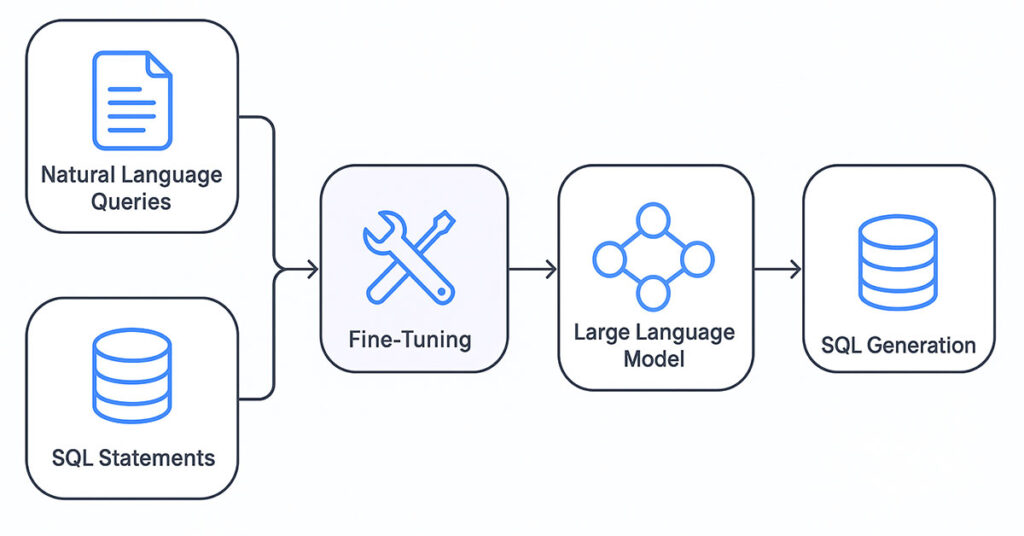

Large Language Models (LLMs), like GPT, T5, and BERT, help make this possible. These models can turn plain English questions into SQL code. But to work well in real-world settings, they often need special training for the specific industry or type of data.

This blog explains how to fine-tune LLMs for accurate SQL generation. We will look at the methods used, the common challenges, and the best tips to get better results.

Jump to:

The Need for SQL Generation via LLMs

Overview of Large Language Model Architectures

Dataset Preparation for Text-to-SQL Training

Fine-Tuning Strategies for Accurate SQL Output

Evaluation Metrics for Text-to-SQL Performance

Challenges in Generating SQL from Natural Language

Best Practices to Improve SQL Generation Accuracy

Tools and Frameworks for LLM Fine-Tuning

The Need for SQL Generation via LLMs

Traditional database queries can be hard to write. They often need technical skills and a good understanding of the database structure. However, Large Language Models (LLMs) help solve this problem. They turn everyday language into SQL, the language used by databases.

As a result, people without coding skills can now access data on their own. This opens new possibilities across many fields. For example, in business intelligence, users can quickly ask questions and get insights. In customer support, teams can find answers faster. Even in healthcare and finance, natural language queries can make work more efficient.

Overview of Large Language Model Architectures

Several LLMs have been explored for text-to-SQL generation, each with unique architectural strengths:

T5 (Text-To-Text Transfer Transformer):

T5 turns every NLP task into a text-to-text problem. This approach gives it a lot of flexibility. Its strong base model and easy fine-tuning make it a great choice for turning natural language into structured SQL.

GPT (Generative Pre-trained Transformer):

GPT works well for generating content. When you fine-tune it with SQL examples or guide it with good prompts, it creates clear and accurate SQL queries based on natural language input.

BART:

BART combines the best parts of BERT and GPT. It uses a two-part system—a smart reader (encoder) and a smart writer (decoder). This setup helps it turn questions into well-formed SQL statements with high accuracy.

Codex (OpenAI Codex):

Codex has been trained on both computer code and plain language. Because of this, it understands logic, syntax, and structure. It can create SQL queries that work well across different data types and domains.

FLAN-T5 (Fine-tuned LLM with Instructions):

FLAN-T5 is trained to follow instructions. It does especially well with few-shot or zero-shot examples. That means it can give accurate SQL results even when there’s very little training data.

Dataset Preparation for Text-to-SQL Training

The quality of the dataset plays a pivotal role in the effectiveness of fine-tuning LLMs for SQL generation. A well-structured dataset ensures the model can generalize across various queries and schemas while producing accurate outputs.

Key Components of a Text-to-SQL Dataset

Natural Language Questions: These are user-posed queries, often written in informal or conversational tone. They form the input that the model will learn to convert into SQL.

SQL Equivalents: Each natural language question must be paired with a correct and executable SQL query. These act as target outputs for supervised learning.

Database Schema Metadata: Includes details such as table names, column names, data types, and relationships between tables. This information helps the model understand the structure and constraints of the database.

Contextual Metadata (optional): Additional information like user intent, query context in multi-turn settings, or domain-specific keywords can enhance the training quality.

Popular Public Datasets

Spider: A complex, cross-domain dataset with diverse SQL queries and rich database schemas. It challenges models to generalize to unseen databases.

WikiSQL: Based on semi-structured Wikipedia tables, it includes simpler SQL queries. Ideal for initial experimentation and benchmarking.

CoSQL: A conversational version of Spider, focused on multi-turn interactions and contextual understanding.

SParC: Like CoSQL, but with more structured turns and transitions, helping train models on dialogue-based SQL tasks.

Custom Dataset Creation Guidelines

Data Collection: Gather domain-specific queries from logs, support chats, or surveys.

Annotation: Have SQL experts manually write corresponding queries or use semi-automated tools to assist.

Normalization: Ensure consistent formatting of both natural language and SQL to reduce variability during training.

Schema Injection: Format inputs to include schema details inline or as separate input components.

Fine-Tuning Strategies for Accurate SQL Output

Fine-tuning a pre-trained Large Language Model for SQL generation involves adapting it to a domain-specific dataset and optimizing it to produce syntactically valid and semantically correct SQL queries. Below are the key strategies and best practices to enhance SQL generation accuracy.

Supervised Fine-Tuning: Train the model using pairs of natural language questions and their corresponding SQL queries. This method helps the model directly learn the input-output mapping. Ensuring high-quality, diverse training data is essential for success.

Schema-Conditioned Generation: Incorporate database schema into the input prompt or model embeddings. Providing schema context helps the model understand the data structure and constraints, enabling it to generate queries that are executable and relevant.

Prompt Engineering: Design prompts that clearly guide the model’s behavior. In zero-shot or few-shot scenarios, include examples in the prompt to show the model how to format the output. This is particularly effective for models like GPT-3.5/4.

Few-Shot and In-Context Learning: For large models that are not fine-tuned but used through APIs, few-shot learning can be applied. Supply a few well-chosen example pairs within the prompt to help the model infer the task.

Curriculum Learning: Start training with simpler SQL queries and gradually introduce more complex ones. This approach helps the model build foundational SQL generation skills before tackling nested or multi-table queries.

Data Augmentation: Expand the training dataset using paraphrased queries, synthetically generated SQL, or slight modifications of existing examples. Augmentation increases robustness and reduces overfitting.

Loss Function Customization: Use customized loss functions or additional penalties for structural errors in SQL. Token-level or component-based losses can help the model learn the importance of SQL syntax elements.

Evaluation Metrics for Text-to-SQL Performance

Evaluating the performance of a fine-tuned LLM for SQL generation is crucial to assess its accuracy, reliability, and real-world applicability. Various metrics have been developed to capture different dimensions of model performance – from syntactic correctness to execution fidelity.

Exact Match Accuracy: Measures the percentage of generated SQL queries that match the reference SQL exactly, token by token. It is a strict metric that reflects syntactic precision but does not account for equivalent yet differently structured queries.

Execution Accuracy (ExecAcc): Evaluates whether the generated SQL produces the same output as the reference SQL when executed on a database. It reflects real-world performance and is more tolerant of structural differences in equivalent queries.

Component Matching: Breaks down SQL queries into components such as SELECT, WHERE, GROUP BY, ORDER BY, etc., and evaluates each part independently. This helps identify which sections of SQL the model handles well or struggles with.

BLEU Score (Bilingual Evaluation Understudy): Measures n-gram overlap between generated and reference queries. Originally used for machine translation, BLEU offers a linguistic similarity score but may overlook semantic and structural correctness.

SQL Structure Match (SQLMatch): Compares the Abstract Syntax Tree (AST) or query plan structure of the generated SQL with the reference. It is useful for understanding if the underlying logic of the query is correct, even if the surface form differs.

Human Evaluation: Involves expert reviewers assessing the quality, correctness, and relevance of the generated SQL. It is resource-intensive but offers valuable insights into usability and edge-case handling.

Query Complexity Metrics: Metrics that categorize and score queries based on their complexity (e.g., number of joins, subqueries, or nesting levels) to evaluate model robustness across simple to advanced tasks.

Schema-Aware Evaluation: Considers the validity of generated SQL against the actual database schema. It helps verify if the model produces executable and meaningful queries with respect to real data structures.

Challenges in Generating SQL from Natural Language

Even with recent progress, generating correct SQL from natural language remains difficult. Several key challenges slow down performance and accuracy:

Ambiguity in Natural Language

Natural language is often vague. Users may ask unclear or incomplete questions. As a result, the model struggles to understand the exact intent without more details or constraints.

Schema Alignment Issues

The model must connect words in the question to the correct tables and columns in the database. When this mapping is wrong, the output SQL becomes invalid or misses key data.

Complex Query Structures

Advanced queries involve joins, subqueries, and groupings. These require deep logic and multi-step reasoning. Many models fail to handle this complexity with high accuracy.

Limited Context Awareness

In real-world use, users often ask follow-up questions. The model must remember previous interactions and schema details. However, many LLMs still struggle to manage context over multiple turns.

Troubleshooting and Evaluation

Assessing SQL generated by language models involves more than syntax validation. The query must also be functionally correct and produce the desired output. Identifying the cause of errors frequently requires manual inspection.

Best Practices to Improve SQL Generation Accuracy

Improving SQL generation accuracy from natural language involves a combination of thoughtful training, prompt design, and validation techniques. Here are five best practices that offer the most impact:

Use Domain-Specific, High-Quality Datasets: Fine-tune the model on datasets that reflect the specific domain and schema structures it will encounter in production. High-quality examples help reduce misinterpretations and hallucinations.

Embed Schema Context in Input: Ensure that table names, column names, and relationships are provided explicitly in the model input. This enables the model to generate SQL queries aligned with the correct schema.

Apply Prompt Engineering Techniques: Design prompts with clear instructions and examples. Use few-shot prompting to demonstrate the expected format, improving performance in low-resource settings or with generalized models.

Validate Outputs Programmatically: Use automated tools to check the generated SQL for syntax errors, schema mismatches, or execution failures. Filtering or correcting outputs post-generation enhances reliability.

Incorporate User Feedback Loops: Allow users to review and refine generated SQL. Capture these edits to retrain or fine-tune the model iteratively, improving accuracy based on real-world usage patterns.

Tools and Frameworks for LLM Fine-Tuning

Fine-tuning Large Language Models for SQL generation requires robust and flexible tools. Below are five leading frameworks that simplify and accelerate this process:

Hugging Face Transformers: An industry-standard library offering a vast collection of pre-trained models and utilities. It supports seamless fine-tuning of models like T5, BERT, GPT-2, and more through intuitive APIs.

TensorFlow: A widely adopted deep learning framework that allows flexible model architecture customization, scalable training, and integration with tools like Keras for efficient fine-tuning pipelines.

Torchtune: A native PyTorch library that simplifies the fine-tuning of large models. It offers modular configurations and supports training on both consumer-grade and multi-GPU environments.

Label Studio: An open-source data labeling platform that enables manual and automated annotation. It helps build high-quality, structured datasets tailored for LLM training workflows.

Databricks Lakehouse: A unified data and AI platform that combines storage, processing, and ML tooling. It simplifies distributed training and model deployment within collaborative data environments.

Case Study: Fine-Tuning T5 for SQL Generation

An enterprise sought to enable its customer support team to independently query internal data systems without requiring SQL expertise. To achieve this, a fine-tuned T5 model was deployed as part of their business intelligence solution.

Objective: Create an intuitive natural language interface that could translate user queries into accurate SQL commands while aligning with internal database schema and security constraints.

Approach:

- A domain-specific dataset of 10,000 question-SQL pairs was curated, covering a variety of intents and query types.

- Each input was schema-augmented, including table names and relevant fields to enhance contextual understanding.

- The model was trained using the t5-base architecture with task-specific formatting (e.g., “Translate: [Question] | Schema: [Details]”).

- Evaluation focused on two key metrics: exact match accuracy (syntactic fidelity) and execution accuracy (semantic correctness).

Outcomes:

- Achieved 85% exact match accuracy, indicating strong alignment with ground truth SQL.

- Reached 90% execution accuracy, confirming correct data retrieval on live databases.

- Significantly reduced reliance on technical teams for everyday query generation.

- Empowered customer support agents to derive insights rapidly through a user-friendly interface integrated into the enterprise BI dashboard.

Conclusion

Fine-tuning large language models for SQL generation is a promising area that bridges natural language understanding and structured data querying. By carefully preparing datasets, selecting appropriate model architectures, and applying best practices, organizations can deploy powerful natural language interfaces to their data platforms. As models continue to evolve, the future holds immense potential for even more accurate, efficient, and intuitive data interaction systems.

To explore these capabilities firsthand, try a free trial of EzInsights AI – a powerful AI platform that helps you turn natural language into actionable data insights.

FAQs

What is the purpose of fine-tuning LLMs for SQL generation?

Fine-tuning helps adapt a general-purpose language model to understand domain-specific data and SQL structure. This improves the model’s ability to convert natural language questions into accurate, executable SQL queries.

Do I need a large dataset to fine-tune an LLM for SQL tasks?

Not necessarily. While large datasets improve performance, high-quality and well-annotated smaller datasets—especially when combined with techniques like few-shot learning or prompt tuning—can still yield strong results.

Which models are best suited for text-to-SQL tasks?

Popular choices include T5, GPT-3.5/4, Codex, BART, and FLAN-T5. Each has unique strengths; for example, Codex excels with code generation, while T5 and FLAN-T5 are strong in instruction-based tasks.

What are common challenges in using LLMs for SQL generation?

Key challenges include understanding ambiguous user queries, mapping terms to the correct schema elements, generating complex SQL structures, and maintaining conversational context in multi-turn queries.

Abhishek Sharma

Website Developer and SEO Specialist

Abhishek Sharma is a skilled Website Developer, UI Developer, and SEO Specialist, proficient in managing, designing, and developing websites. He excels in creating visually appealing, user-friendly interfaces while optimizing websites for superior search engine performance and online visibility.