Large language models (LLMs) like GPT are changing the way businesses use data, interact with customers, and streamline processes. These models offer great potential, but to unlock it, grounding is essential. Grounding means connecting AI responses to reliable data, specific contexts, or domain knowledge so that the output is accurate, relevant, and trustworthy.

By grounding LLMs, businesses can align AI responses with user expectations and real-world needs. Grounded models reduce errors and provide actionable insights for tasks like customer support, data analysis, and decision making. This improves the quality of interactions and builds trust in AI systems.

To get the most out of LLMs, focus on grounding them effectively. This practice helps industries maximize the potential of AI while ensuring trustworthy results.

What is LLM Grounding?

LLM Grounding connects a large language model (LLM) like GPT to external sources such as real-world data or knowledge. This helps ensure the model’s output is relevant and accurate. It also allows the model to understand specific fields and interact with different types of data, such as real-time information or knowledge graphs.

Grounding boosts model performance by:

- Ensuring accuracy: LLMs can now refer to live data or specific sources, instead of relying only on training data.

- Focusing on specific domains: It helps LLMs specialize in areas like medical, legal, or business by integrating domain knowledge.

- Clarifying unclear terms: It helps remove confusion by connecting ambiguous words to real-world contexts.

Why LLM Grounding is Important

LLMs need grounding because they are reasoning engines, not data repositories. While LLMs handle language, logic, and text manipulation well, they lack deep knowledge of specific domains or context. Their knowledge is also frozen at training time: LLMs are trained on fixed datasets, and updating them is complex, costly, and time-consuming, so their information can be outdated.

LLMs are trained largely on publicly available data, so they miss crucial information that sits behind corporate firewalls or in proprietary datasets from fields like finance, healthcare, retail, and telecommunications. Without grounding, LLMs cannot access this valuable data.

Grounding helps an LLM connect better with the real world. It acts like a bridge, enabling the model to understand words’ true meaning, navigate language nuances, and link its knowledge with real-world situations. This also reduces errors, or “hallucinations,” that can occur when the model lacks context.

Here are some reasons why grounding matters:

- Improves accuracy: LLMs can access live data and trusted sources, making their responses more likely to be correct and relevant.

- Enhances specialization: Grounding helps models focus on specific fields like medicine, law, or business by incorporating expert knowledge.

- Clarifies confusion: It helps resolve unclear or ambiguous terms by linking them to real-world contexts, making the model’s output easier to understand.

How Does LLM Grounding Work?

LLM grounding connects large language models (LLMs) to external data sources. This gives the model access to real-time and accurate information, beyond what it learned during training. Here’s how grounding works:

- Integration with external data: The model can use live databases, APIs, or knowledge graphs to pull in updated information.

- Contextual understanding: Grounding helps the model understand specific words or phrases by linking them to real-world data. This improves its interpretation of meaning.

- Domain-specific knowledge: Grounding allows the model to specialize in fields like healthcare or finance. This ensures it can answer questions accurately within those areas.

- Reducing errors: Grounding helps minimize errors or “hallucinations.” The model can check its answers against trusted data, making the output more reliable.

LLM Grounding Technique with RAG

Retrieval-Augmented Generation (RAG) is a technique that improves LLM grounding by retrieving relevant external data at query time and supplying it to the model as context while it generates a response. This lets the model draw on the most relevant, current information from a large database, so its answers are more likely to be accurate and up to date.

With RAG, LLMs can handle more complex questions across different areas, as they have access to the latest information available. This makes their responses more reliable. However, implementing RAG comes with its challenges, such as ensuring that data is retrieved efficiently and that the information used is both relevant and accurate.

Despite these challenges, RAG holds great promise for improving LLM performance. It is particularly valuable in situations that require the model to access large, constantly updated knowledge sources in real time. By enhancing the grounding process, RAG contributes to better results, especially in applications like creating data products based on specific entities.
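The retrieve-then-generate flow behind RAG can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production pipeline: the sample documents and the `retrieve` and `build_prompt` helpers are hypothetical, and simple word-overlap scoring stands in for the embedding model and vector index a real system would typically use. The resulting prompt would then be sent to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be a document store.
DOCUMENTS = [
    "The return window for online orders is 30 days from delivery.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Shipping to Canada takes 5 to 7 business days.",
]

def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in retrieve(query, docs, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

Calling `build_prompt("How long is the return window?", DOCUMENTS)` produces a prompt whose context contains the return-policy sentence, so the model answers from that passage rather than from memory.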

Top LLM Grounding Challenges

While LLM grounding enhances model performance, it comes with several challenges. Here are some of the top hurdles:

- Data Relevance: Ensuring that the data retrieved and used for grounding is accurate and relevant is a key challenge. Irrelevant or outdated data can lead to incorrect responses.

- Efficient Data Retrieval: Grounding requires quick and effective access to large datasets. Building systems that can retrieve data efficiently, especially in real time, is complex.

- Contextual Understanding: LLMs may struggle with understanding how to apply the external data correctly in different contexts. Without the proper connection to the query, even accurate data can lead to errors.

- Data Privacy and Security: When using external data, ensuring that it comes from secure and trusted sources is essential. Protecting sensitive data during the retrieval process is another challenge.

- Computational Costs: Managing large datasets and integrating them with LLMs can be resource-intensive, requiring significant computational power and memory, which can drive up costs.

- Keeping Data Updated: Since LLMs rely on external sources for accurate and current information, ensuring that the data stays up to date can be difficult, especially when new information is constantly emerging.

Implementing Grounding in Large Language Models (LLMs)

Implementing grounding in Large Language Models (LLMs) involves linking their responses to reliable data, domain-specific knowledge, or contextual information. Here’s a structured approach to effectively ground LLMs:

Identifying the Source of Grounding Information

The first step in grounding Large Language Models (LLMs) is identifying the right data sources. Reliable and structured data, like customer records, product databases, or financial reports, is essential because it anchors the AI’s output in accurate information. Incorporating domain-specific knowledge, such as medical guidelines, legal regulations, or technical manuals, helps the AI give precise responses. Grounding can also involve using contextual data, like purchase history or recent conversations, to tailor the model’s answers to the current situation.

Incorporating External APIs and Data Integrations

Integrating external APIs and data sources helps ground LLMs effectively. By linking the model to real-time data through APIs, the AI can access the latest news, weather, or stock market information. This keeps the model’s responses up to date and relevant. Businesses can also integrate custom databases or FAQs to provide specific information. This method improves the model’s ability to offer current, industry-relevant insights, enhancing the quality and reliability of its outputs.

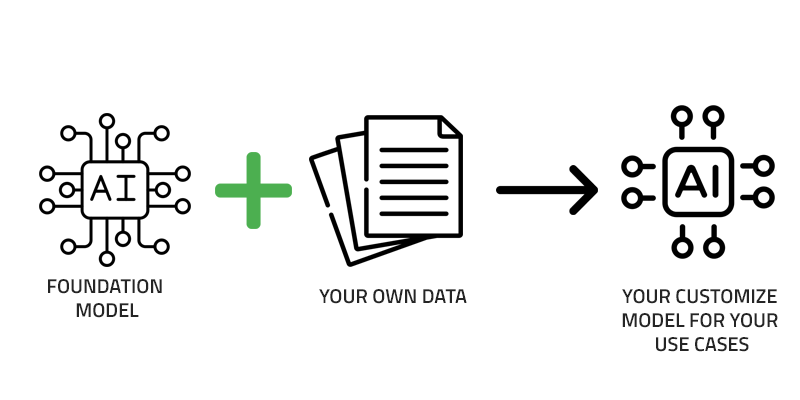
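As a rough sketch of API-based grounding, the snippet below fetches a value and places it, with a retrieval timestamp, ahead of the user’s question. Everything here is an assumption for illustration: `fake_quote_api` is a hypothetical stand-in that returns fixed data instead of calling a real market-data service, and `grounded_prompt` is an invented helper name.

```python
from datetime import datetime, timezone
from typing import Callable

def fake_quote_api(symbol: str) -> dict:
    """Hypothetical stand-in for a real market-data API (no network access)."""
    return {"symbol": symbol, "price": 101.25, "currency": "USD"}

def grounded_prompt(
    question: str,
    symbol: str,
    fetch: Callable[[str], dict] = fake_quote_api,
) -> str:
    """Fetch live data and put it in the prompt ahead of the question."""
    quote = fetch(symbol)
    retrieved_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return (
        f"Live data (retrieved {retrieved_at}):\n"
        f"{quote['symbol']} is trading at {quote['price']} {quote['currency']}.\n"
        "Use only the live data above for prices.\n"
        f"Question: {question}"
    )
```

Passing the fetcher in as a parameter keeps the prompt logic testable and makes it easy to swap the stub for a real client later.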

Fine-Tuning with Domain-Specific Training

Fine-tuning the LLM with domain-specific data is crucial. By training the model on datasets containing industry-specific terms and processes, it becomes more proficient in handling specialized queries. Fine-tuning helps the model understand the unique needs of different fields, such as healthcare or finance. Adding contextual cues during training allows the model to adjust its responses based on user intent and previous interactions.
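Fine-tuning data is often prepared as one JSON record per line (JSONL). The sketch below shows one way to turn domain question-and-answer pairs into such a file. The exact schema depends on the training framework or provider, so the `messages` layout, the sample pairs, and the `to_jsonl` helper are assumptions for illustration only.

```python
import json

# Hypothetical domain Q&A pairs; real data would come from reviewed documents.
EXAMPLES = [
    ("What does 'prior authorization' mean?",
     "Approval an insurer requires before it agrees to cover a treatment."),
    ("What is a 'formulary'?",
     "The list of prescription drugs covered by a health plan."),
]

def to_jsonl(pairs, system_prompt: str) -> str:
    """Serialize (question, answer) pairs as chat-style JSONL records."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)
```

Including the same system prompt in every record is one simple way to supply the contextual cue the model should learn to respond to.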

Implementing Verification Mechanisms

Verification mechanisms ensure the accuracy of grounded responses. One approach is to cross-check the model’s output with trusted data sources. This reduces the risk of errors or outdated information. In fields like healthcare or finance, where precision is vital, a Human-in-the-Loop (HITL) approach allows experts to validate AI responses. This combination of automation and manual verification strengthens the trustworthiness of the system.
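A cross-check with a human fallback might look like the sketch below. It is deliberately simplistic: it only compares numbers found in the answer against a single trusted value, and the `TRUSTED` record, the tolerance, and the `verify_answer` helper are all hypothetical.

```python
import re

# Hypothetical trusted record, e.g. loaded from a finance database.
TRUSTED = {"q3_revenue_musd": 48.2}

def verify_answer(answer: str, trusted_value: float, tolerance: float = 0.05) -> str:
    """Return 'approved' if any number in the answer matches the trusted value,
    otherwise 'needs_human_review' (the Human-in-the-Loop path)."""
    numbers = [float(n) for n in re.findall(r"\d+(?:\.\d+)?", answer)]
    if any(abs(n - trusted_value) <= tolerance for n in numbers):
        return "approved"
    return "needs_human_review"
```

An answer such as "Q3 revenue was $48.2 million." is approved, while "Q3 revenue was $52 million." is routed to an expert. Answers that contain no numbers at all also go to review, which errs on the side of caution.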

Setting Boundaries for Model Responses

Grounding also involves defining the scope of the model’s knowledge. This ensures the AI provides relevant answers and avoids topics outside its expertise. For example, a finance-focused model shouldn’t offer medical advice. Setting these boundaries keeps the AI’s responses accurate and focused. Transparency is also key. The AI should be able to explain how it arrived at its answers, so users understand its reasoning.
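One simple way to enforce such boundaries is a scope check that runs before the model’s draft is returned, paired with a list of the sources used. The sketch below assumes a hypothetical finance assistant; the keyword set, refusal text, and helper names are invented for the example, and a real system would more likely use a trained classifier than keyword matching.

```python
import re

# Hypothetical scope definition for a finance-focused assistant.
IN_SCOPE_TERMS = {"invoice", "budget", "revenue", "expense", "tax", "loan"}
REFUSAL = "I can only help with finance questions."

def in_scope(question: str) -> bool:
    """Crude keyword check: does the question mention any in-scope term?"""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return bool(words & IN_SCOPE_TERMS)

def answer_with_sources(question: str, draft: str, sources: list[str]) -> str:
    """Refuse out-of-scope questions; otherwise attach the sources used."""
    if not in_scope(question):
        return REFUSAL
    return f"{draft}\nSources: {', '.join(sources)}"
```

Listing sources alongside the answer is a lightweight form of transparency: users can see which documents the response was based on.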

Monitoring and Adjusting Over Time

Grounding is an ongoing process that requires continuous updates. As new information becomes available, the model’s data sources should be refreshed. Regular updates help the model stay relevant and accurate. Collecting user feedback is crucial for improving the grounding process. Listening to users helps refine the model’s ability to deliver actionable, context-aware insights.
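Part of this monitoring can be automated. The sketch below flags data sources that have not been refreshed within a chosen window; the source names, dates, and the `stale_sources` helper are hypothetical, and the right maximum age depends on how quickly each source changes.

```python
from datetime import datetime, timedelta, timezone

def stale_sources(
    last_refreshed: dict[str, datetime],
    max_age: timedelta,
    now: datetime,
) -> list[str]:
    """Names of sources whose last refresh is older than max_age, sorted."""
    return sorted(
        name for name, ts in last_refreshed.items() if now - ts > max_age
    )

# Hypothetical refresh log for two grounding sources.
now = datetime(2024, 6, 10, tzinfo=timezone.utc)
refresh_log = {
    "pricing_db": datetime(2024, 6, 9, tzinfo=timezone.utc),
    "policy_docs": datetime(2024, 4, 1, tzinfo=timezone.utc),
}
overdue = stale_sources(refresh_log, timedelta(days=30), now)
```

Here `overdue` contains only `policy_docs`, since it was last refreshed more than 30 days before `now`.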

Tools and Technologies for Grounding

Businesses can use various tools to ground LLMs effectively. Retrieval-Augmented Generation (RAG) combines generative models with external retrieval systems. This allows the model to pull in real-time data for more relevant responses. Knowledge graphs help the model understand relationships between entities, improving accuracy. Embedding models also aid grounding by representing text as vectors, so the system can retrieve information that is semantically similar to the user’s input.
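To make the knowledge-graph idea concrete, the sketch below stores relationships as (subject, relation, object) triples and turns the ones that mention an entity into plain sentences that could be added to a prompt. The product data and the `facts_about` helper are hypothetical; real deployments typically use a graph database and a query language rather than a Python list.

```python
# Hypothetical triples: (subject, relation, object).
TRIPLES = [
    ("Laptop X1", "manufactured_by", "Acme"),
    ("Laptop X1", "compatible_with", "Dock D2"),
    ("Dock D2", "manufactured_by", "Acme"),
]

def facts_about(entity: str, triples) -> list[str]:
    """Render every triple that mentions the entity as a short sentence."""
    return [
        f"{s} {r.replace('_', ' ')} {o}"
        for s, r, o in triples
        if entity in (s, o)
    ]
```

For example, `facts_about("Dock D2", TRIPLES)` returns the compatibility fact and the manufacturer fact, giving the model explicit relationships instead of leaving it to guess them.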

Conclusion

As AI continues to evolve, grounding LLMs helps enterprises get more value from it. By supplying language models with deep, industry-specific knowledge, LLM grounding enhances the accuracy and relevance of AI-driven solutions across business operations such as IT, HR, and procurement. This process not only overcomes the limitations of base models but also enables faster, more precise decision-making.

Adopting LLM grounding is crucial for businesses looking to innovate and improve efficiency in an increasingly competitive landscape. As AI and human expertise converge, organizations will unlock new levels of advancement.


FAQs

What is LLM Grounding?

LLM grounding refers to the process of linking a language model’s responses to external data, context, or specific knowledge to ensure accuracy and relevance. It helps to avoid hallucinations (incorrect or fabricated responses) and ensures that the model’s outputs align with real-world facts or the context it’s working within.

How do I ground a large language model in my specific domain?

To ground an LLM in a specific domain, you can provide it with domain-specific training data, such as technical documents, manuals, or knowledge bases. Additionally, integrating APIs or external databases during interactions can help provide up-to-date, accurate information that the model can reference when generating responses.

What are the benefits of grounding an LLM?

Grounding an LLM can significantly improve the quality of its responses by providing more context, reducing errors, and increasing the model’s relevance to specific tasks. This leads to more trustworthy, accurate, and context-aware outputs, making it particularly valuable for specialized industries like healthcare, finance, or legal services.

How can I implement real-time grounding in an LLM for dynamic environments?

To implement real-time grounding, you can integrate the LLM with live data sources such as databases, APIs, or real-time information streams. This ensures that the model can fetch the most current data and adapt its responses based on real-time information, enhancing the relevance and accuracy of its outputs.

Abhishek Sharma

Website Developer and SEO Specialist

Abhishek Sharma is a skilled Website Developer, UI Developer, and SEO Specialist, proficient in managing, designing, and developing websites. He excels in creating visually appealing, user-friendly interfaces while optimizing websites for superior search engine performance and online visibility.