Neural networks are a key technology in machine learning and AI. Loosely inspired by the human brain, they help computers process data and recognize patterns, which allows machines to make decisions based on relationships learned from that data.

This article explores different types of neural networks, including feedforward, convolutional, recurrent, and deep neural networks. Each type has unique features and applications, which make it suited to particular AI tasks.

Understanding Neural Networks

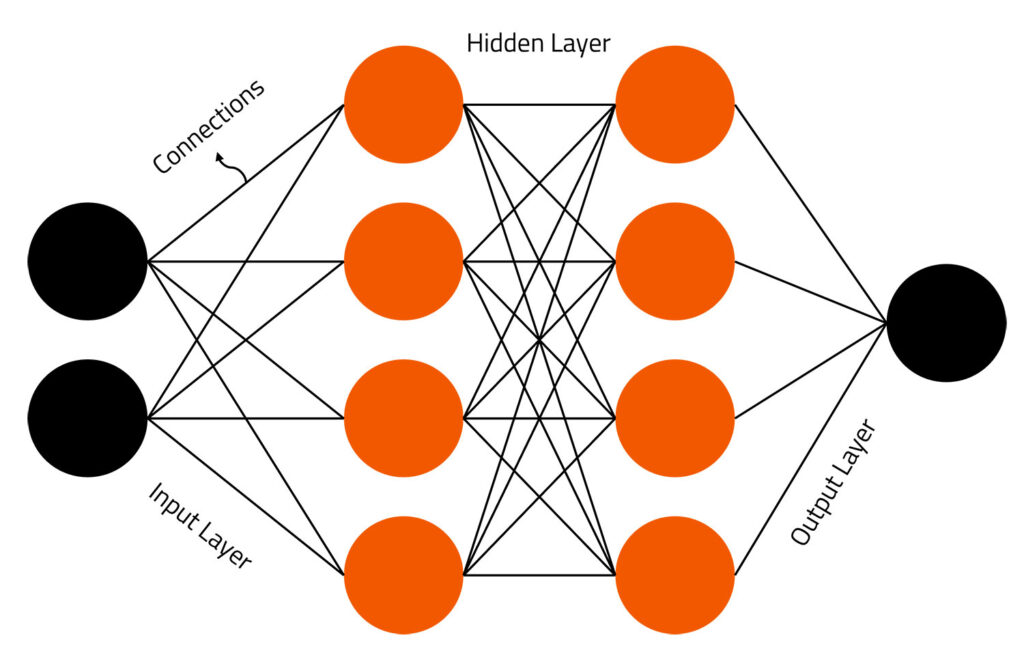

A neural network is a set of algorithms loosely modeled on how the human brain works. It helps identify patterns in data. These networks consist of artificial neurons, also called nodes, organized into three kinds of layers: an input layer, one or more hidden layers, and an output layer.

Each connection between nodes has a weight that is adjusted during training. This allows the network to learn and improve its accuracy over time. Neural networks excel at tasks like image recognition, language processing, and predictive modeling, and once trained they can generalize to new, unseen inputs without being redesigned.

Types of Neural Networks

There are many kinds of neural networks, and each has advantages and disadvantages depending on the use case. Examples include:

Feedforward Neural Network (FNN): The simplest type, in which data moves in one direction from input to output.

Convolutional Neural Network (CNN): Specialized for image and video processing, using filters to detect patterns.

Recurrent Neural Network (RNN): Used for sequential data like time series and natural language processing, incorporating memory to retain past information.

Long Short-Term Memory (LSTM): A type of RNN that uses gates to control what it remembers and forgets, which helps it handle long-term dependencies.

Generative Adversarial Network (GAN): Consists of two networks, a generator and a discriminator, that compete against each other so that the generator learns to produce realistic data.

Transformer Networks: Used for NLP tasks and in models such as OpenAI’s GPT series; their attention mechanism lets them process large amounts of text efficiently.

Radial Basis Function Network (RBFN): Used in function approximation and classification tasks.

Self-Organizing Map (SOM): Utilized for clustering and visualization of high-dimensional data.

Autoencoder: Employed for unsupervised learning, particularly for dimensionality reduction and anomaly detection.

Spiking Neural Networks (SNN): Mimic biological neurons more closely by communicating through discrete spikes, which can enable energy-efficient processing.
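To make two of these types more concrete, here is a minimal pure-Python sketch of the core operation behind a CNN (sliding a small filter across the input) and an RNN (updating a hidden state at each time step). The function names `conv1d` and `rnn_final_state` and the weight values are hypothetical, chosen only for illustration; real networks learn their filter and recurrent weights during training and operate on much larger inputs.

```python
import math

def conv1d(signal, kernel):
    """Slide `kernel` across `signal` (valid positions only), as a CNN filter does.
    Strictly this is cross-correlation, which is what CNN layers compute."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn_final_state(sequence, w_x=1.0, w_h=0.8, b=0.0):
    """Hypothetical scalar recurrent cell: the hidden state h carries
    information from earlier time steps into later ones."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state depends on input AND old state
    return h

print(conv1d([0, 0, 1, 1, 1], [-1, 1]))  # [0, 1, 0, 0]
print(rnn_final_state([1, 0, 0]), rnn_final_state([0, 0, 0]))
```

The filter `[-1, 1]` responds only where the signal steps from 0 to 1, the kind of local pattern a CNN filter detects. In the RNN sketch, the first input still affects the final state two steps later, which is the memory that lets RNNs retain past information.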

Basic Architecture of a Neural Network

Neural networks are loosely inspired by the human brain, processing data through layers of interconnected nodes (neurons) to identify patterns and make predictions. Here’s how they work:

Input Layer

The input layer receives raw data, such as images, text, or numbers, encoded as numerical values. It performs no computation of its own; it passes these values to the first hidden layer for processing.
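As a minimal sketch, assuming a grayscale image stored as a 2-D list of pixel values from 0 to 255 (a hypothetical format chosen for illustration), preparing it for the input layer can be as simple as flattening it into one vector and scaling each value to the range [0, 1]:

```python
def image_to_input(pixels):
    """Flatten a 2-D grid of 0-255 grayscale values into one vector scaled to [0, 1]."""
    return [value / 255.0 for row in pixels for value in row]

image = [[0, 255], [128, 64]]  # hypothetical 2x2 image
x = image_to_input(image)      # one input neuron per pixel
print(x)
```

Scaling inputs to a small, consistent range is a common preprocessing step because it tends to make training more stable.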

Hidden Layers

Hidden layers perform the network’s core computation: each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. Stacked hidden layers extract increasingly abstract patterns and features, helping the network model relationships within the data.
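The sketch below shows a single fully connected hidden layer in plain Python: each neuron takes a weighted sum of the inputs, adds its bias, and applies the ReLU activation. The function name `dense_relu` and the example weights are hypothetical; deep learning frameworks typically implement the same computation as one matrix multiplication over a whole batch.

```python
def dense_relu(x, weights, biases):
    """One fully connected layer: each row of `weights` holds one neuron's weights."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(w_row, x)) + b  # weighted sum plus bias
        outputs.append(max(0.0, z))                     # ReLU activation
    return outputs

x = [1.0, 2.0]                                  # two input values
W = [[0.5, -0.25], [-1.0, 0.5], [0.2, 0.3]]     # three hidden neurons (hypothetical)
bias = [0.1, 0.0, -0.5]
print(dense_relu(x, W, bias))
```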

Weights & Biases

Weights define the strength of connections between neurons, while biases shift a neuron’s output independently of its inputs. By tuning these values during training, the network learns the patterns in its data and produces better predictions.
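In equation form, a single neuron with inputs x_i, weights w_i (for i = 1 to n), and bias b computes a weighted sum z before its activation function is applied. The numbers in the worked example are arbitrary values chosen for illustration:

```latex
z = \sum_{i=1}^{n} w_i x_i + b
% Worked example with x = (1, 2), w = (0.5, -0.25), b = 0.1:
z = (0.5)(1) + (-0.25)(2) + 0.1 = 0.5 - 0.5 + 0.1 = 0.1
```

Without the bias term, z would be exactly 0 for these inputs; the bias lets the neuron shift its output even when the weighted inputs cancel out.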

Activation Functions

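Activation functions transform each neuron’s weighted sum into its output, determining how strongly the neuron activates. They introduce non-linearity, allowing the network to learn complex patterns. Examples include ReLU, which is cheap to compute; Sigmoid, which maps values into the range (0, 1) and is often used for probabilities; and Softmax, which turns a vector of scores into a probability distribution for multi-class classification.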
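Here is a minimal pure-Python sketch of the three activation functions mentioned above. The sigmoid is written in a numerically stable form, and softmax subtracts the largest score before exponentiating, a common trick that avoids overflow without changing the result.

```python
import math

def relu(z):
    # Passes positive values through unchanged, outputs 0 otherwise
    return max(0.0, z)

def sigmoid(z):
    # Squashes any real number into (0, 1); branch avoids overflow in math.exp
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def softmax(scores):
    # Converts a list of scores into probabilities that sum to 1
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0), sigmoid(0.0))
print(softmax([1.0, 2.0, 3.0]))
```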

Output Layer

The output layer generates final predictions, such as image classifications, stock forecasts, or text outputs. It translates processed information from hidden layers into meaningful results, making decisions based on learned patterns.
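As a sketch of a classification output layer, assuming three hypothetical classes and made-up weights, the function below computes one score (logit) per class from the hidden-layer activations, converts the scores to probabilities with softmax, and predicts the class with the highest probability:

```python
import math

def output_layer(hidden, weights, biases):
    """Return (predicted class index, class probabilities) for one example."""
    # One logit per class: weighted sum of hidden activations plus bias
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(weights, biases)]
    # Softmax turns logits into probabilities (max subtracted for stability)
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return predicted, probs

labels = ["cat", "dog", "bird"]    # hypothetical class names
hidden = [0.1, 0.0, 0.3]           # activations from the last hidden layer
W_out = [[1.0, 0.5, -1.0], [0.2, 0.1, 2.0], [-0.5, 0.3, 0.0]]
b_out = [0.0, 0.0, 0.0]
idx, probs = output_layer(hidden, W_out, b_out)
print(labels[idx], probs)
```

For a regression task such as a numeric forecast, the output layer would usually skip softmax and return the weighted sum directly.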

Training & Backpropagation

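Training adjusts the weights and biases to reduce prediction error. A loss function measures how far each prediction is from the correct answer; backpropagation then sends this error backward through the network to compute how much each weight contributed to it (its gradient). Gradient descent uses these gradients to nudge every weight in the direction that reduces the error, gradually refining the model’s ability to recognize patterns and make reliable decisions.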
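The following is a minimal, self-contained sketch of backpropagation and gradient descent, written in plain Python so every step is visible. It trains a tiny network (2 inputs, 4 tanh hidden neurons, 1 sigmoid output) on the XOR problem with binary cross-entropy loss. The architecture, learning rate, and epoch count are illustrative choices, not tuned values.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_xor(hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train a one-hidden-layer network on XOR and return the average loss per epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]                        # hidden -> output
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        total_loss = 0.0
        for x, y in data:
            # Forward pass: tanh hidden layer, sigmoid output
            h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            p = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            pc = min(max(p, 1e-12), 1.0 - 1e-12)  # clamp only to keep log() finite
            total_loss += -(y * math.log(pc) + (1.0 - y) * math.log(1.0 - pc))
            # Backward pass: for sigmoid + cross-entropy, dLoss/d(output sum) = p - y
            d_out = p - y
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Chain rule back through tanh, whose derivative is 1 - tanh(z)^2
                d_h = d_out * w2[j] * (1.0 - h[j] ** 2)
                gb1[j] += d_h
                gw1[j][0] += d_h * x[0]
                gw1[j][1] += d_h * x[1]
        n = len(data)
        losses.append(total_loss / n)
        # Gradient descent: move each parameter against its averaged gradient
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            w1[j][0] -= lr * gw1[j][0] / n
            w1[j][1] -= lr * gw1[j][1] / n
        b2 -= lr * gb2 / n
    return losses

losses = train_xor()
print(f"loss at epoch 0: {losses[0]:.3f}, after training: {losses[-1]:.3f}")
```

Each epoch runs a forward pass, measures the loss, propagates the error backward with the chain rule, and takes one gradient descent step. In practice, libraries compute these gradients automatically (automatic differentiation), but the underlying idea is the same.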

Challenges of Neural Networks

Neural networks have revolutionized AI and machine learning, but they come with significant challenges that impact their development and deployment. Here are five key challenges:

High Computational Cost

Training deep neural networks requires significant processing power, often needing GPUs or TPUs. This increases costs and limits accessibility for smaller businesses or researchers with limited resources.

Large Data Requirements

Supervised neural networks typically need vast amounts of labeled data for accurate training. Obtaining high-quality datasets can be time-consuming, expensive, or even impractical for certain applications.

Black Box Nature

Understanding how a neural network makes decisions is difficult. The lack of transparency can be problematic in sensitive fields like healthcare and finance, where explainability is crucial.

Overfitting Issues

Neural networks can memorize training data instead of generalizing patterns, leading to poor performance on new, unseen data. Regularization techniques and proper validation are necessary to mitigate this.
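Two common safeguards can be sketched in a few lines: an L2 penalty (weight decay), which is added to the training loss to discourage large weights, and early stopping, which watches the loss on a held-out validation set and keeps the weights from the epoch where it was lowest. The helper names and the validation losses below are hypothetical values for illustration.

```python
def l2_penalty(weights, lam):
    """L2 regularization term added to the training loss: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)

def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch with the best validation loss, stopping once the loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss keeps rising: likely overfitting
    return best_epoch

print(l2_penalty([1.0, -2.0], lam=0.1))
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.62, 0.65, 0.66]))  # 2
```

Here the validation loss bottoms out at epoch 2 and then rises while training would likely continue to improve, which is the typical signature of overfitting.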

Ethical & Bias Concerns

AI models can inherit biases present in training data, leading to unfair or discriminatory outcomes. Addressing bias requires careful data curation, ethical AI practices, and continuous monitoring.

Why are neural networks important?

Neural networks are important because they enable machines to learn from data, recognize patterns, and make intelligent decisions. They power applications like image recognition, speech processing, and predictive analytics, making AI more efficient and capable across various industries.

Their ability to handle complex, high-dimensional data makes them essential for automation, medical diagnoses, financial forecasting, and self-driving cars. By continuously improving accuracy through training, neural networks drive innovation in artificial intelligence, enhancing productivity and transforming technology-driven solutions.

Who uses neural networks?

Neural networks are used by a wide range of professionals and industries, including:

Data Scientists & AI Researchers

They develop and refine deep learning models for tasks like image recognition, natural language processing, and generative AI. Their work advances AI capabilities, optimizing everything from medical diagnoses to personalized recommendations in various industries.

Financial Analysts & Traders

Neural networks analyze vast datasets to detect fraud, predict market trends, and optimize trading strategies. AI-driven models improve risk management and automate investment decisions, enhancing efficiency and profitability in the financial sector.

Healthcare Professionals

Deep learning aids in medical imaging, drug discovery, and disease prediction. AI-powered neural networks improve diagnostic accuracy, detect anomalies in X-rays and MRIs, and assist in early-stage disease detection, revolutionizing modern healthcare.

Autonomous Vehicle Engineers

Neural networks process sensor data to enable real-time decision-making in self-driving cars. They help detect objects, recognize road signs, and predict pedestrian behavior, making autonomous transportation safer and more reliable.

Cybersecurity Experts

AI-powered neural networks enhance threat detection by identifying anomalies in network traffic. They help prevent cyberattacks, detect fraud, and improve real-time security monitoring, making digital environments more secure.

Practical Applications of Neural Networks

Neural networks, a fascinating aspect of artificial intelligence, have found their way into various domains, revolutionizing the way we approach problems and solutions. Let’s dive into the diverse applications of neural networks, highlighting their adaptability and potential future trends.

Image Recognition: One of the most striking uses of neural networks is image recognition. They can identify patterns and objects in images with remarkable accuracy. From unlocking your phone with facial recognition to diagnosing diseases from medical images, neural networks are making significant contributions.

Natural Language Processing (NLP): Neural networks are also at the forefront of understanding and processing human language. Whether it’s translating languages, powering voice assistants like Siri or Alexa, or enabling chatbots for customer service, NLP relies heavily on neural networks.

Finance: In the financial sector, neural networks are used for predictive analysis, such as forecasting stock prices or identifying fraudulent activities. Their ability to analyze vast amounts of data helps in making informed decisions.

Healthcare: The healthcare industry benefits immensely from neural networks. They assist in early disease detection, drug discovery, and personalized medicine, contributing to more efficient and effective patient care.

Future of Neural Networks in AI

As neural network research advances, several themes are becoming apparent:

Explainable AI (XAI): Transparency in AI models is becoming increasingly important. Researchers are developing techniques to make the decisions of neural networks easier to interpret.

Transfer Learning: Models pre-trained on large datasets can be fine-tuned for specific tasks with much less data, making powerful AI technologies more accessible to smaller businesses.

Neural Architecture Search (NAS): Automating the design of network architectures can produce efficient models tailored to specific tasks without extensive manual tuning.

Integration with Edge Computing: As the number of IoT devices grows, lightweight neural networks that run directly on edge devices will enable real-time decisions with less dependence on cloud computing resources.

Continual Learning Systems: Research is ongoing into systems that can keep learning from new data without forgetting what they learned previously, which has important implications for AI applications in many fields.

Conclusion

In summary, we explored neural networks and their role in technology. Loosely inspired by the human brain, these systems learn from data and use what they learn to make decisions. We discussed their structure, learning process, and impact on various industries.

Neural networks are transforming problem-solving in fields like image recognition, language processing, finance, and healthcare. Their importance continues to grow, and in the future, they will drive even more groundbreaking advancements.

FAQs

What is a neural network?

Inspired by the human brain, a neural network is a machine learning model made up of interconnected nodes, or neurons, that analyze data to identify trends and provide predictions.

How do neural networks learn?

Neural networks learn through a training procedure that reduces prediction errors by adjusting the weights of the connections between neurons. The gradients that guide these adjustments are computed with a technique called backpropagation.

What are the main components of a neural network?

A neural network has three main components: the input layer, where data is fed in; one or more hidden layers, where processing takes place; and the output layer, which generates the result.

What are common applications of neural networks?

Neural networks are extensively employed in many different industries for applications like speech recognition, image recognition, natural language processing, and predictive modeling.

Abhishek Sharma

Website Developer and SEO Specialist

Abhishek Sharma is a skilled Website Developer, UI Developer, and SEO Specialist, proficient in managing, designing, and developing websites. He excels in creating visually appealing, user-friendly interfaces while optimizing websites for superior search engine performance and online visibility.