Large Language Models (LLMs), artificial intelligence (AI) systems trained on vast amounts of text, are transforming industries with their ability to understand and generate human-like language. Many are built on the transformer architecture, as in OpenAI's Generative Pre-trained Transformer (GPT) family. One of the best-known LLMs, OpenAI's GPT-3, contains 175 billion parameters, which made it one of the largest models available when it was released in 2020. According to Market Research Future, the global AI market is projected to reach $733.7 billion by 2027, with LLMs playing a pivotal role in this growth.

LLMs like GPT-3 can significantly enhance business intelligence (BI). They can process large volumes of text, such as reports, reviews, and support tickets, to surface trends that feed predictive analytics. Their natural language understanding also makes BI tools more intuitive, letting users ask questions in plain language instead of writing queries. As a result, businesses can use LLMs to get more out of BI, uncover valuable insights, and improve decision-making. Gartner predicts that by 2025, over 50% of business analytics queries will be generated via search, natural language processing, or voice, highlighting the growing influence of LLMs in BI.

In this blog, we explore how businesses can harness the power of LLMs to transform their BI strategies and unlock new opportunities for growth and efficiency.

Understanding Large Language Models

Large Language Models (LLMs) are at the forefront of artificial intelligence advancements, transforming the way we process and interact with text data. These models are trained on vast amounts of text, enabling them to understand, generate, and respond to human language with remarkable fluency. Among the most notable LLMs is OpenAI’s GPT-3, which has 175 billion parameters and was one of the largest language models when it was released in 2020.

Key Characteristics of LLMs:

Extensive Training Data: LLMs are trained on diverse datasets that include books, articles, websites, and other forms of written content. This extensive training allows them to comprehend complex language patterns, contexts, and nuances.

Natural Language Processing (NLP): Utilizing advanced NLP technologies, LLMs can perform a wide range of tasks such as text generation, translation, summarization, and sentiment analysis. This makes them versatile tools for various applications.

Human-like Text Generation: The sophisticated architecture of LLMs enables them to produce text that closely mimics human writing. This capability is valuable for content creation, automated customer service, and conversational AI.

Adaptability: LLMs can be fine-tuned for specific industries and applications, making them adaptable to diverse fields such as healthcare, finance, and education.

Examples of Large Language Models

Large Language Models (LLMs) represent a significant leap forward in the field of artificial intelligence, particularly in natural language processing (NLP). These models are designed to understand and generate human-like text by leveraging vast amounts of data and sophisticated algorithms. Here, we explore some of the most prominent LLMs, detailing their capabilities, architectures, and applications.

GPT-3

OpenAI’s GPT-3, with 175 billion parameters, performs tasks such as translation, summarization, and code generation, often from just a few examples supplied in the prompt. It is used in business intelligence, healthcare, and the creative industries for its versatile natural language processing capabilities.

BERT

Google’s BERT, with up to 340 million parameters, reads text bidirectionally to capture the context of each word in a sentence, which suits tasks like question answering and sentiment analysis. It has been used to improve search engines, customer service chatbots, and machine translation systems, making it pivotal for user interaction and information retrieval.

T5

Google’s T5, with up to 11 billion parameters, casts every NLP task in a text-to-text format: both the input and the output are plain text strings. It is used for content generation, academic research, and business analytics, producing summaries, translations, and insights from large datasets.
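To make the text-to-text idea concrete, here is a minimal Python sketch of how different tasks can be phrased as plain input strings for a single model. The task prefixes follow the style used in the original T5 paper (for example `summarize:` and `translate English to German:`); the helper `to_text_to_text` and the task names in `TASK_PREFIXES` are hypothetical, and no model is called.

```python
# Hypothetical mapping from task names to T5-style text prefixes.
TASK_PREFIXES = {
    "summarize": "summarize: ",
    "translate_en_de": "translate English to German: ",
    "sentiment": "sst2 sentence: ",
}

def to_text_to_text(task: str, text: str) -> str:
    """Frame an NLP task as a single input string for a text-to-text model."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text.strip()

if __name__ == "__main__":
    print(to_text_to_text("summarize", "Sales rose in the north region and fell in the south."))
    print(to_text_to_text("translate_en_de", "The report is ready."))
```

Because every task shares the same string-in, string-out interface, one model and one training objective can serve summarization, translation, and classification alike.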

XLNet

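Developed by Google Brain and Carnegie Mellon University (CMU), XLNet (about 340 million parameters in its large version) is trained with permutation language modeling: it predicts each word under many randomly sampled factorization orders of the sentence, so it learns context from both sides of a word. At its release it outperformed BERT on several benchmarks, and it has been applied to market analysis, legal research, and healthcare.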
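The permutation idea can be illustrated without a model: a factorization order over token positions is sampled, and each token is predicted from the tokens that come before it in that order, not necessarily before it in the sentence. The minimal Python sketch below only computes which context each token would see for one sampled order; the function name `permutation_contexts` is hypothetical, and the sketch omits XLNet's two-stream attention and partial prediction.

```python
import random

def permutation_contexts(tokens, seed=0):
    """For one sampled factorization order, return (order, contexts), where
    contexts[pos] lists the tokens visible when predicting tokens[pos]."""
    rng = random.Random(seed)
    order = list(range(len(tokens)))
    rng.shuffle(order)  # the sampled factorization order
    contexts = {}
    for step, pos in enumerate(order):
        # Positions earlier in the order are visible, wherever they sit in the sentence.
        visible = sorted(order[:step])
        contexts[pos] = [tokens[p] for p in visible]
    return order, contexts

if __name__ == "__main__":
    tokens = ["sales", "grew", "in", "March"]
    order, contexts = permutation_contexts(tokens, seed=1)
    for pos in order:
        print(f"predict {tokens[pos]!r} from {contexts[pos]}")
```

Averaged over many sampled orders, each token is eventually predicted from words on both its left and its right, which is how XLNet captures bidirectional context while remaining autoregressive.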

RoBERTa

Developed by Facebook AI, RoBERTa is a robustly optimized version of BERT with roughly 125-355 million parameters, trained longer and on a larger, more diverse dataset than the original. It has been used to improve targeted advertising, support personalized education systems, and strengthen financial analysis through advanced natural language understanding.

LLM Use Cases in the Healthcare Industry

Large Language Models (LLMs) offer substantial benefits and applications within the healthcare industry, utilizing their advanced natural language processing capabilities to enhance various aspects of patient care, medical research, and operational efficiency.

Medical Documentation and Summarization: LLMs can analyze and summarize large volumes of medical records, research papers, and patient notes efficiently. This capability aids healthcare professionals in quickly accessing critical information, making informed decisions, and improving patient care.
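Medical records and research papers often exceed a model's context window, so a common pattern is to split them into overlapping chunks, summarize each chunk, and then summarize the summaries. The Python sketch below assumes a word-based limit (real models count tokens, not words) and takes the summarizer as a hypothetical `summarize` callable, since no specific LLM API is implied here; `chunk_words` and `summarize_long` are illustrative names.

```python
from typing import Callable, List

def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of at most max_words words, overlapping by `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 200) -> str:
    """Map-reduce summarization: summarize each chunk, then summarize the combined summaries."""
    partial = [summarize(chunk) for chunk in chunk_words(text, max_words)]
    return summarize("\n".join(partial))
```

The overlap keeps sentences that straddle a chunk boundary visible to both neighbouring calls, at the cost of a few repeated words per chunk.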

Clinical Decision Support: By processing and interpreting medical literature and patient data, LLMs assist clinicians in making evidence-based decisions. They can provide insights into treatment options, potential drug interactions, and personalized care pathways, which can help improve diagnostic accuracy and treatment outcomes.

Patient Interaction and Education: LLM-powered chatbots and virtual assistants can engage with patients in natural language, answering queries about symptoms, treatments, and healthcare guidelines. These virtual assistants can also provide personalized health education, promoting patient empowerment and adherence to medical advice.

Drug Discovery and Development: LLMs contribute to accelerating the drug discovery process by analysing vast amounts of biomedical literature and genomic data. They can identify potential drug candidates, predict molecular interactions, and facilitate the design of novel therapies, leading to advancements in personalized medicine.

Healthcare Administration and Efficiency: LLMs streamline administrative tasks such as medical coding, billing, and scheduling by automating repetitive processes. This efficiency improvement allows healthcare providers to focus more on patient care and reduces operational costs.

Public Health and Epidemiology: LLMs can analyse health-related data from various sources, including social media, news articles, and medical journals, to detect disease outbreaks, monitor public sentiment, and support epidemiological research. This capability is crucial for early warning systems and public health interventions.

Ethical and Legal Compliance: LLMs assist healthcare organizations in maintaining compliance with regulatory requirements and ethical standards by analysing and interpreting healthcare policies, guidelines, and legal documents.

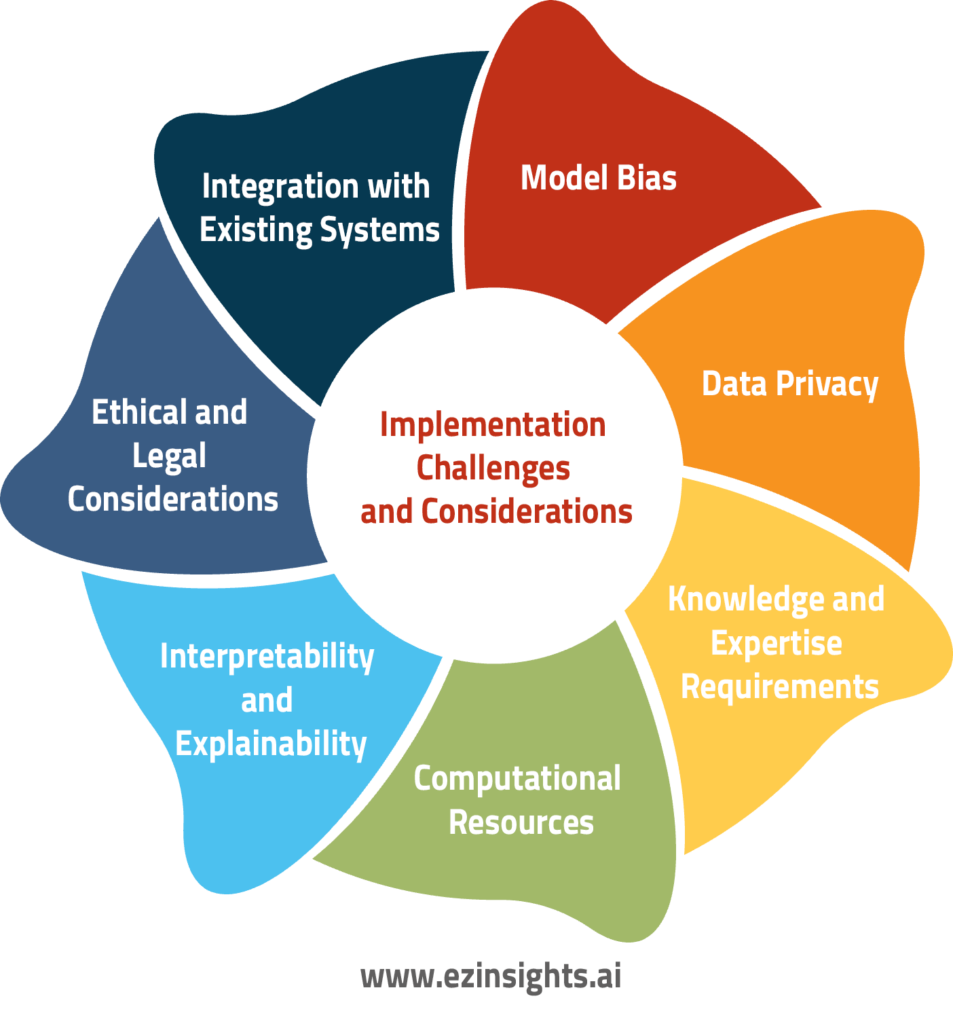

Implementation Challenges and Considerations

Implementing Large Language Models (LLMs) like GPT-3 raises several challenges that demand careful thought. These include:

Model Bias:

LLMs can inherit biases present in their training data. These biases may produce unfair or discriminatory results, particularly in sensitive contexts such as hiring, loan approval, or content moderation. Addressing them requires careful data pre-processing, more diverse training datasets, and continuous monitoring of model outputs.
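Continuous checking of outputs can start with something as simple as comparing positive-outcome rates across groups. The Python sketch below computes per-group approval rates and the gap between the highest and lowest (the demographic parity difference); the `(group, approved)` record format, the group labels, and the 0.1 alert threshold are assumptions for illustration, not a regulatory standard.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of approved decisions per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def parity_gap(rates: Dict[str, float]) -> float:
    """Demographic parity difference: highest minus lowest group approval rate."""
    return max(rates.values()) - min(rates.values())

if __name__ == "__main__":
    # Hypothetical model outputs for a loan-approval task.
    decisions = [("A", True), ("A", True), ("A", False), ("A", True),
                 ("B", True), ("B", False), ("B", False), ("B", False)]
    rates = approval_rates(decisions)
    gap = parity_gap(rates)
    print(rates, round(gap, 2))
    if gap > 0.1:  # assumed alert threshold
        print("Review: approval rates differ noticeably across groups")
```

A gap on its own does not prove unfairness, but tracking it over time flags when outputs drift and deserve a closer look.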

Data Privacy:

Training LLMs effectively typically requires large amounts of data, which raises data privacy concerns, particularly when private or sensitive information is involved. To protect user data, businesses must comply with data protection laws (such as the CCPA in California and the GDPR in Europe) and put strong data anonymization and encryption procedures in place.
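One practical safeguard is to redact or pseudonymize obvious identifiers before any text reaches an LLM. The Python sketch below replaces email addresses and phone-like numbers with stable pseudonyms derived from a salted SHA-256 hash. The regular expressions are deliberately simple assumptions that will miss many kinds of personal data (and may over-match things like dates), so treat this as a starting point rather than a GDPR or CCPA compliance mechanism; `redact` and `pseudonym` are illustrative names.

```python
import hashlib
import re

# Simplified patterns (assumptions): real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def pseudonym(value: str, salt: str, label: str) -> str:
    """Map an identifier to a stable placeholder such as <EMAIL_1a2b3c4d>."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:8]
    return f"<{label}_{digest}>"

def redact(text: str, salt: str) -> str:
    """Replace emails and phone-like numbers with salted-hash pseudonyms."""
    text = EMAIL_RE.sub(lambda m: pseudonym(m.group(), salt, "EMAIL"), text)
    text = PHONE_RE.sub(lambda m: pseudonym(m.group(), salt, "PHONE"), text)
    return text

if __name__ == "__main__":
    note = "Contact jane.doe@example.com or +1 555-123-4567 about the invoice."
    print(redact(note, salt="rotate-me"))
```

Because the same input always maps to the same placeholder, analysts can still join records across documents without exposing raw identifiers to the model, provided the salt is kept secret.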

Knowledge and Expertise Requirements:

Effective LLM implementation requires expertise in AI and natural language processing (NLP). Companies need skilled specialists who can fine-tune models, interpret data and model outputs, and integrate LLMs into existing workflows. For smaller companies without access to such expertise, this can be a hurdle.

Computational Resources:

Training and deploying LLMs can require substantial computing power. To support model training and real-time inference, businesses must invest in sufficient hardware infrastructure (such as GPUs and cloud computing resources), which can be expensive.
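A back-of-the-envelope calculation shows why. Just storing a model's weights takes roughly parameters × bytes per parameter, so GPT-3's 175 billion parameters at 16-bit precision (2 bytes each) need about 350 GB, before counting activations, caches, or optimizer state. The small Python helper below performs that arithmetic; the precision options are illustrative assumptions, and `weight_memory_gb` is a hypothetical name.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

if __name__ == "__main__":
    gpt3_params = 175e9
    for label, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
        print(f"{label}: {weight_memory_gb(gpt3_params, nbytes):,.0f} GB")
```

At 350 GB, the 16-bit weights alone need more than four times the memory of a single 80 GB GPU, which is why models of this size are split across several accelerators.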

Interpretability and Explainability:

Because LLMs are often described as “black box” models, it can be difficult to understand how they arrive at their outputs. This lack of transparency is problematic in regulated industries such as finance and healthcare, where decisions must be explainable. Techniques such as inspecting attention weights or distilling a model into a simpler one can improve interpretability, although they do not fully solve the problem.
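Attention weights are one of the internal signals that can be inspected directly. The Python sketch below computes scaled dot-product attention weights, softmax(q · kᵢ / √d), for a single query over a few token keys; the tiny hand-written query and key vectors are hypothetical, chosen only to make the computation easy to follow.

```python
import math
from typing import List, Sequence

def attention_weights(query: Sequence[float], keys: List[Sequence[float]]) -> List[float]:
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    tokens = ["revenue", "fell", "sharply"]
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical key vectors
    query = [2.0, 0.0]  # hypothetical query vector
    for tok, w in zip(tokens, attention_weights(query, keys)):
        print(f"{tok:>8}: {w:.2f}")
```

Here the query aligns most closely with the first key, so "revenue" receives the largest weight. Inspecting such weights can hint at which inputs a prediction relied on, but attention alone is not a complete explanation of model behaviour.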

Ethical and Legal Considerations:

Deploying LLMs raises ethical questions about AI’s effects on society, job displacement, and the responsible use of technology. Companies must take these issues into account when drafting policies that support ethical AI practices and comply with the law.

Integration with Existing Systems:

Integrating LLMs into existing business processes and IT infrastructure can be difficult. Careful planning and close collaboration between AI specialists and domain experts are needed to avoid compatibility and scalability problems and to limit the need for bespoke solutions.
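A common integration pattern is to let the LLM translate a business question into SQL while the existing database remains the system of record, with a validation layer in between. The Python sketch below uses an in-memory SQLite database and a hypothetical `generate_sql` callable standing in for the LLM; the `sales` table and the read-only check are illustrative assumptions, and the check is not a substitute for database-level permissions.

```python
import sqlite3
from typing import Callable, List, Tuple

def is_read_only(sql: str) -> bool:
    """Very simple guard: allow a single SELECT statement only (not full SQL parsing)."""
    stripped = sql.strip().rstrip(";")
    return stripped.lower().startswith("select") and ";" not in stripped

def answer_question(question: str, generate_sql: Callable[[str], str],
                    conn: sqlite3.Connection) -> List[Tuple]:
    """Turn a natural-language question into SQL via the model, validate it, then run it."""
    sql = generate_sql(question)
    if not is_read_only(sql):
        raise ValueError(f"rejected non-read-only SQL: {sql!r}")
    return conn.execute(sql).fetchall()

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)",
                     [("North", 120.0), ("South", 80.0), ("North", 30.0)])

    # Hypothetical stand-in for an LLM call; a real system would prompt a model with the schema.
    def fake_llm(question: str) -> str:
        return "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"

    print(answer_question("What are total sales by region?", fake_llm, conn))
```

Keeping the model's output behind a validation step, and running generated queries under a read-only database account, limits the damage a malformed or malicious query can do to existing systems.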

Conclusion

In conclusion, even with the automation and efficiency benefits of Large Language Models (LLMs), organizations still need to address challenges such as model bias, data privacy, and the need for specialist AI and NLP skills. Mitigating bias through careful data preparation, taking strict steps to preserve privacy, and complying with legislation are essential. The complexity of implementation and maintenance underscores the importance of strategic planning and investment. Adhering to ethical principles supports responsible implementation, builds credibility, and helps organizations realize the full potential of LLMs in their digital transformation.

FAQs

How can LLMs improve business intelligence?

By analysing enormous volumes of textual data, Large Language Models provide sophisticated sentiment analysis, trend forecasting, and insights into consumer behaviour. They can also generate tailored content, automate data processing tasks, and support better decision-making through advanced natural language understanding.

What are the main obstacles that companies must overcome to use LLMs for business intelligence?

Enterprises frequently need to mitigate model bias, ensure compliance with data protection regulations, and acquire specialist knowledge in AI and NLP. Other major challenges include interpreting complex model outputs, managing computing resources, and integrating LLMs with existing IT infrastructure.

What steps can companies take to reduce the risks of using LLMs for business intelligence?

Risk mitigation involves putting strong data protection mechanisms in place, carefully preparing data to reduce bias, and complying with legal frameworks such as the GDPR. Investing in training AI specialists and establishing explicit ethical guidelines for LLM deployment helps ensure responsible use and increases stakeholder trust in AI-driven business intelligence solutions.

Anupama Desai

President & CEO

Anupama has more than 23 years of experience as a business leader and as an advocate for improving the lives of business users. She has been very active in bringing a business perspective to the technology-enabled world. Her passion is leveraging information and data insights for better business performance by empowering people within the organization. Anupama currently leads Winnovation in building a world-class Business Intelligence application platform, with the aim of providing data insights to every person within an organization at the lowest possible cost.