Generative AI is transforming industries by enabling machines to create text, images, music, and code with human-like abilities. However, its adoption presents challenges, especially in data privacy, security, and computational efficiency. Federated Learning (FL) offers a solution by training AI models in a decentralized way while keeping data private. Integrating Enhanced Federated Learning with Generative AI can lead to more efficient, secure, and collaborative AI development.

Understanding Generative AI

Generative AI refers to the subset of artificial intelligence that focuses on creating new data samples that mimic existing data distributions. Applications range from generating realistic images and videos to producing human-like text and synthesizing medical data for research purposes. Prominent examples include OpenAI’s GPT series and DALL-E, which have shown how generative models can push the boundaries of creativity and utility.

Despite its potential, generative AI faces significant hurdles, particularly in accessing high-quality and diverse datasets without compromising user privacy.

Federated Learning: A Privacy-Preserving Approach

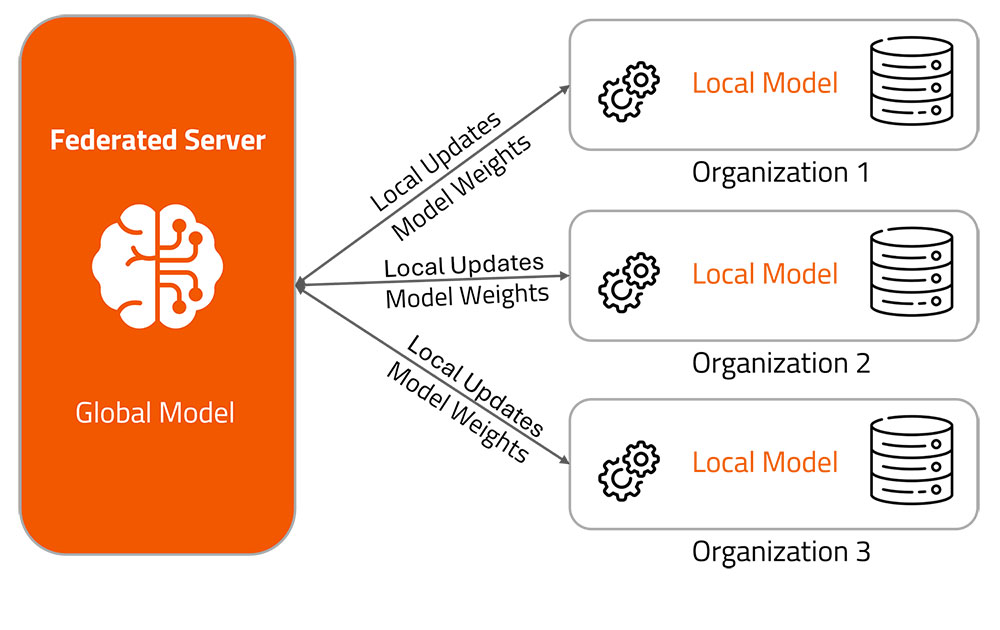

Federated learning is a decentralized machine learning method that lets several parties build a model together without disclosing their raw data. This approach is especially beneficial in industries like healthcare or banking, where data protection is crucial. By keeping data on local devices and sharing only model updates, federated learning lets enterprises make use of collective intelligence while lowering the risk of data breaches.

Enhancing Federated Learning for Generative AI

While traditional Federated Learning enhances privacy, Enhanced Federated Learning incorporates additional techniques to improve performance and scalability for Generative AI.

Differential Privacy and Secure Aggregation

Differential Privacy limits how much shared updates can reveal about individual data points. By clipping each model update and adding calibrated noise to it, it makes it much harder for adversaries to reconstruct private data. Secure Aggregation complements this by masking or encrypting model updates before they are shared, so that the server learns only the combined result and not any single participant's update. Together, these two layers help keep data private even when many devices collaborate.
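As a rough illustration of both ideas, the sketch below clips and perturbs each client update, then hides it behind pairwise random masks that cancel when the server sums everything. It is a simplified toy, not a production protocol: the function names, the noise level `sigma`, and the example updates are all assumptions made for this example, and real secure aggregation uses key agreement and modular arithmetic rather than plain floating-point masks.

```python
import math
import random

def clip(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def add_gaussian_noise(update, sigma, rng):
    """Add independent Gaussian noise to every coordinate."""
    return [v + rng.gauss(0.0, sigma) for v in update]

def pairwise_masks(num_clients, dim, rng):
    """Build one mask per client such that all masks sum to zero."""
    masks = [[0.0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.uniform(-100, 100) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] += shared[k]  # client i adds the shared mask
                masks[j][k] -= shared[k]  # client j subtracts the same mask
    return masks

rng = random.Random(42)
updates = [[3.0, 4.0], [0.3, -0.4], [-6.0, 8.0]]   # hypothetical client updates
clipped = [clip(u, max_norm=1.0) for u in updates]  # bound each client's influence
noisy = [add_gaussian_noise(u, sigma=0.1, rng=rng) for u in clipped]

masks = pairwise_masks(len(noisy), dim=2, rng=rng)
masked = [[v + m for v, m in zip(u, mk)] for u, mk in zip(noisy, masks)]

# The server only sums the masked vectors; the masks cancel in the sum.
aggregate = [sum(col) for col in zip(*masked)]
expected = [sum(col) for col in zip(*noisy)]
```

How `sigma` maps to a formal privacy budget, and whether noise is added on the client or the server, are deployment choices this sketch does not cover.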

Adaptive Model Compression

Generative AI models require substantial computational resources, making FL implementations challenging due to high bandwidth usage. Adaptive Model Compression techniques such as quantization and pruning reduce the size of transmitted model updates. This optimizes network efficiency, allowing decentralized training without overwhelming communication networks while maintaining model performance.
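A minimal sketch of the idea, assuming a model update is just a flat list of floats: keep only the largest-magnitude entries (a simple form of pruning) and store them as 8-bit integers with one shared scale (quantization). The function names and the fixed `k` are illustrative assumptions. An adaptive scheme would pick `k` or the bit width per round, for example from available bandwidth.

```python
def top_k_sparsify(update, k):
    """Keep the k largest-magnitude entries; return (index, value) pairs."""
    idx = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    return [(i, update[i]) for i in sorted(idx)]

def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale

def compress(update, k):
    """Sparsify, then quantize the surviving values."""
    pairs = top_k_sparsify(update, k)
    q, scale = quantize_int8([v for _, v in pairs])
    return [i for i, _ in pairs], q, scale

def decompress(indices, q, scale, dim):
    """Rebuild a dense update; dropped entries become zero."""
    out = [0.0] * dim
    for i, x in zip(indices, q):
        out[i] = x * scale
    return out

update = [0.02, -1.5, 0.3, 0.0, 0.9, -0.05]  # hypothetical update
indices, q, scale = compress(update, k=3)
restored = decompress(indices, q, scale, dim=len(update))
```

Here only three indices, three small integers, and one scale are transmitted instead of six floats. The price is an approximation error of at most half a quantization step on the kept entries, plus the dropped small entries.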

Personalization and On-Device Fine-Tuning

Generative AI models benefit from on-device fine-tuning to deliver personalized experiences. Rather than relying on centralized training, FL allows models to adapt locally based on user preferences. This approach is particularly useful in applications like personalized virtual assistants, medical diagnostics, and customized content generation, where context-awareness plays a crucial role in improving AI responses.
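One simple way to picture on-device fine-tuning is to start from the shared global model and adapt only a small part of it on local data. The toy below uses a one-dimensional linear model and adapts only the bias term. The model, the data, and the function names are assumptions chosen to keep the example small. Real generative models would typically adapt a subset of layers or lightweight adapter weights instead.

```python
def predict(weights, x):
    w, b = weights
    return w * x + b

def mse(weights, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune_bias(global_weights, local_data, lr=0.1, steps=50):
    """Start from the global model and adapt only the bias on local data."""
    w, b = global_weights
    for _ in range(steps):
        grad_b = sum(2 * ((w * x + b) - y) for x, y in local_data) / len(local_data)
        b -= lr * grad_b
    return (w, b)

global_weights = (2.0, 1.0)  # hypothetical shared model: y = 2x + 1
# This user's data follows y = 2x + 3, i.e. a user-specific offset.
local_data = [(x / 10, 2.0 * (x / 10) + 3.0) for x in range(-10, 11)]

personal = fine_tune_bias(global_weights, local_data)
error_before = mse(global_weights, local_data)
error_after = mse(personal, local_data)
```

The personalized weights stay on the device. Only the shared part of the model would take part in federated rounds.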

Blockchain Integration for Security

Blockchain technology strengthens the security framework of Federated Learning by ensuring transparency and preventing data tampering. Using blockchain to secure model update validation fosters trust among participating devices, reducing risks of adversarial attacks and unauthorized modifications. This decentralized ledger approach enhances the credibility of model training in sensitive applications such as finance and healthcare.
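The tamper-evidence part of this idea can be sketched with a plain hash-linked log, where each entry stores a digest of a model update plus the hash of the previous entry. This is only the ledger data structure, with hypothetical field names. A real blockchain adds distributed consensus among participants, which is not shown here.

```python
import hashlib
import json

GENESIS = "0" * 64

def block_hash(body):
    """Hash a block body deterministically."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_update(chain, client_id, update):
    """Record a digest of the update, linked to the previous block."""
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {
        "client_id": client_id,
        "update_digest": hashlib.sha256(json.dumps(update).encode("utf-8")).hexdigest(),
        "prev_hash": prev,
    }
    chain.append({**body, "hash": block_hash(body)})

def verify_chain(chain):
    """Return True only if every block is intact and correctly linked."""
    prev = GENESIS
    for block in chain:
        body = {k: v for k, v in block.items() if k != "hash"}
        if block["prev_hash"] != prev or block["hash"] != block_hash(body):
            return False
        prev = block["hash"]
    return True

chain = []
append_update(chain, "client-a", [0.1, -0.2])
append_update(chain, "client-b", [0.05, 0.3])
valid_before = verify_chain(chain)

chain[0]["update_digest"] = "f" * 64  # simulate tampering with a recorded update
valid_after = verify_chain(chain)
```

Because every block depends on the one before it, changing any recorded update invalidates the chain from that point onward.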

The Challenges of Standard Federated Learning in Generative AI

Despite its advantages, standard Federated Learning faces several challenges when applied to Generative AI models.

High Computational and Communication Costs

Generative AI models require significant processing power due to their complexity, leading to high computational costs. Additionally, frequent updates and large model sizes increase network bandwidth usage, making Federated Learning implementations expensive and inefficient for real-world applications.
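A back-of-the-envelope estimate shows why bandwidth becomes a concern. The figures below are purely hypothetical (a 100-million-parameter model, 32-bit weights, 50 clients per round), and the calculation assumes each client downloads the full model and uploads a full-size update every round.

```python
def round_traffic_bytes(num_params, bytes_per_param, clients_per_round):
    """Bytes moved in one round: each client downloads the model and uploads an update."""
    per_client = 2 * num_params * bytes_per_param
    return per_client * clients_per_round

# Hypothetical setup: 100M parameters, 4 bytes each, 50 clients per round.
per_round = round_traffic_bytes(num_params=100_000_000, bytes_per_param=4, clients_per_round=50)
per_round_gb = per_round / 1e9          # 40.0 GB per round
total_tb = per_round * 100 / 1e12       # 4.0 TB over 100 rounds
```

Even this modest configuration moves about 40 GB per round, and larger generative models scale the figure proportionally.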

Data Heterogeneity and Non-IID Data

Data collected from decentralized devices is often highly diverse and non-IID (not independent and identically distributed), leading to inconsistencies in model training. Because each device sees only a skewed slice of the overall data, variations in data distributions can result in biases and unstable performance across different devices.
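To see what non-IID data looks like in practice, the sketch below splits the same labeled dataset in two ways: a shuffled split, where every client sees all classes, and a label-skewed split, where each client sees only one class. The dataset, the function names, and the extreme one-class-per-client skew are assumptions for illustration. Real deployments usually fall somewhere in between.

```python
import random
from collections import Counter

def iid_partition(labels, num_clients, rng):
    """Shuffle sample indices and deal them out evenly."""
    idx = list(range(len(labels)))
    rng.shuffle(idx)
    return [idx[i::num_clients] for i in range(num_clients)]

def label_skew_partition(labels, num_clients):
    """Sort by label and cut into contiguous shards, so each client sees few classes."""
    idx = sorted(range(len(labels)), key=lambda i: labels[i])
    size = len(idx) // num_clients  # any remainder is dropped in this sketch
    return [idx[i * size:(i + 1) * size] for i in range(num_clients)]

def label_histogram(labels, part):
    """Count how many samples of each class a client holds."""
    return Counter(labels[i] for i in part)

rng = random.Random(0)
labels = [i % 4 for i in range(400)]  # hypothetical dataset: 4 classes, 100 samples each

iid = iid_partition(labels, 4, rng)
skewed = label_skew_partition(labels, 4)

iid_classes = [len(label_histogram(labels, p)) for p in iid]
skewed_classes = [len(label_histogram(labels, p)) for p in skewed]
```

A model trained locally on one of the skewed shards never sees three of the four classes, which is the kind of mismatch that makes averaged updates pull in different directions.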

Model Convergence and Stability Issues

Training deep learning models in a decentralized environment presents convergence challenges. Because devices train on different data and may report their updates at different times, inconsistencies in training data and model updates can lead to slow convergence, affecting the overall stability and quality of Generative AI outputs.

Security and Privacy Risks

Although Federated Learning enhances data privacy, risks such as model inversion attacks and adversarial manipulation remain. Malicious actors could introduce biased updates or extract sensitive information from shared model parameters, posing a potential security threat to Generative AI implementations.

Limited Personalization Capabilities

Standard Federated Learning lacks efficient methods for on-device personalization, restricting Generative AI’s ability to generate context-aware outputs. Without adaptive learning strategies, the models may fail to deliver personalized responses, necessitating advanced techniques like Federated Meta-Learning to improve adaptability.

Types of Federated Learning

Federated learning includes different methods designed for specific needs in distributed machine learning. The core idea of decentralized data training remains the same, but how it is implemented can differ. Here are four main types of federated learning:

Centralized Federated Learning

This is the most common method, also known as server-based federated learning. A central server manages the entire training process. It sends a global model to client devices. These devices train the model locally and send updates back to the server. The server then combines the updates to improve the global model.

This approach is useful when a trusted central authority is needed, such as tech companies improving services across devices or healthcare organizations working together on research.
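The send, train, and combine loop described above can be sketched with federated averaging, where the server averages client models weighted by local dataset size. This is a toy under stated assumptions: the model is a one-dimensional linear regression, the three clients and their data are synthetic, and the names `local_train`, `fed_avg`, and `run_rounds` are made up for this example.

```python
import random

def local_train(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of SGD on one client's private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)

def fed_avg(client_weights, client_sizes):
    """Average client models, weighted by local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def run_rounds(clients, rounds=20):
    """Server loop: broadcast the global model, collect updates, aggregate."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_train(global_weights, data) for data in clients]
        global_weights = fed_avg(updates, [len(d) for d in clients])
    return global_weights

rng = random.Random(0)
# Three hypothetical clients, each holding private samples of y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (rng.uniform(-1, 1) for _ in range(20))]
    for _ in range(3)
]
w, b = run_rounds(clients)
```

Only the `(w, b)` pairs travel between clients and server. The `(x, y)` samples never leave the client lists.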

Decentralized Federated Learning

In this model, there is no central server. Clients communicate directly in a peer-to-peer network. Each client acts as both a learner and an aggregator, sharing models or updates with others. Blockchain technology is sometimes used to coordinate these interactions. The global model emerges from the combined work of all clients.

This method is ideal when there is no trusted central authority. It also improves privacy and reduces risks from single points of failure.
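A minimal sketch of peer-to-peer aggregation is gossip averaging: in each round, every peer replaces its model with the average of its own model and its neighbors' models. The ring topology, the one-parameter models, and the function name are assumptions for this example, and local training between rounds is left out to keep the focus on aggregation.

```python
def gossip_round(models, neighbors):
    """Each peer averages its model with the models of its direct neighbors."""
    new_models = []
    for i, model in enumerate(models):
        group = [model] + [models[j] for j in neighbors[i]]
        new_models.append([sum(vals) / len(group) for vals in zip(*group)])
    return new_models

# Hypothetical ring of four peers; each peer talks only to its two neighbors.
neighbors = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
models = [[0.0], [4.0], [8.0], [12.0]]  # one-parameter model per peer

for _ in range(30):
    models = gossip_round(models, neighbors)
```

After enough rounds, all peers hold approximately the same model (here the mean of the starting values, 6.0) without any server ever seeing all of them. Plain averaging preserves the mean in this case because every peer has the same number of neighbors. Irregular topologies would need weighted averaging.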

Heterogeneous Federated Learning

Heterogeneous federated learning handles the challenges of training models across devices and data with different characteristics. It uses adaptive algorithms to manage differences in processing power, data quality, and quantity across clients.

This method works well in situations where diversity is common, such as IoT networks or when many organizations collaborate.

Cross-Silo Federated Learning

Cross-silo federated learning focuses on collaboration between organizations or data silos. It involves a few stable participants, like companies or institutions, with access to large datasets and strong infrastructure. Legal agreements often govern data sharing and model ownership.

This method is effective for joint research or fraud detection between organizations. It lets organizations share insights without compromising sensitive data.

Key Features of Federated Learning

Decentralized Training: AI models train across many devices, keeping data local. This reduces security risks and boosts privacy for users and organizations.

Data Privacy: Sensitive data stays on local devices. This minimizes exposure risks and cuts down on regulatory challenges. It also ensures user confidentiality while still allowing effective model training.

Efficient Model Aggregation: Only trained model updates are shared, not raw data. This saves bandwidth and reduces the strain on computational resources across devices.

Adaptive Learning: AI models change based on real-time user interactions. This provides more personalized and context-aware results, without the need for centralized data collection.

Scalability: Federated learning allows for easy expansion across many devices. It supports AI model deployment in large-scale applications like smart devices, IoT, and enterprise solutions.

Security Enhancements: Techniques like differential privacy, secure aggregation, and blockchain-based validation strengthen data security. These methods guard against cyber threats and help preserve integrity in federated AI training.

Applications of Federated Learning

Federated learning has a wide range of applications in many industries. It enables secure, privacy-preserving collaboration while using decentralized data. Here are some key applications:

Healthcare: Federated learning helps hospitals and medical institutions collaborate without transferring sensitive patient data. It’s used to improve diagnostic tools, drug discovery, and personalized treatment plans.

Finance: In finance, federated learning supports fraud detection, credit risk assessment, and anti-money laundering. Banks and financial institutions can build strong models together without exposing sensitive customer data.

Retail and E-commerce: Retailers and e-commerce platforms use federated learning to create personalized recommendations and demand forecasting models. They can do this by analyzing customer behavior across decentralized systems while keeping user privacy intact.

Smart Devices and IoT: Federated learning helps improve features in smart devices, like smartphones and IoT gadgets. It enhances predictive text, voice recognition, and anomaly detection without sending user data to centralized servers.

Autonomous Vehicles: Autonomous vehicles use federated learning to aggregate data from multiple vehicles. This improves object detection, route optimization, and decision-making, all while ensuring data privacy.

Education: Federated learning allows educational institutions to improve adaptive learning systems and recommendation engines. They can collaborate on student performance data without compromising privacy.

Government and Public Policy: Government agencies can use federated learning for large-scale projects like urban planning, epidemic modeling, and disaster response. This approach securely combines data from various sources.

Agriculture: In agriculture, federated learning helps optimize crop yield predictions, pest detection, and resource management. It integrates data from different farms without exposing proprietary information.

The Future of Generative AI with Federated Learning

With ongoing advancements in federated optimization, decentralized model training, and secure AI frameworks, Generative AI is set to become more ethical and privacy-aware. Organizations adopting Enhanced Federated Learning can drive AI innovation while ensuring data security, reduced latency, and regulatory compliance.

As AI continues to evolve, the fusion of Generative AI with Enhanced Federated Learning will play a pivotal role in shaping privacy-first, intelligent, and decentralized AI applications across industries.

Conclusion

Combining generative AI with enhanced federated learning is a significant step forward for AI. By addressing class imbalance and drawing on decentralized data sources, organizations can create strong models that protect user privacy and make use of collective intelligence. As technology continues to advance, the integration of these techniques is likely to shape the future landscape of AI applications across multiple sectors.

In conclusion, the combination of generative AI and federated learning stands out as an inventive option, poised to drive major improvements in artificial intelligence as we continue to navigate the challenges of data privacy and machine learning performance.

FAQs

What is federated learning, and how does it work?

Federated learning enables decentralized model training, where data stays local, and only model updates are shared for central aggregation.

How does generative AI contribute to addressing class imbalance in federated learning?

Generative AI produces synthetic data to balance class distributions, improving model performance by generating additional minority class samples.

What are the benefits of using vertical federated learning?

Vertical federated learning allows organizations with different features but the same samples to collaborate while keeping their data private.

What challenges does federated learning face, and how are they being addressed?

Security and privacy risks are tackled with secure multi-party computation and differential privacy, communication costs with model compression, and data heterogeneity with personalization and adaptive algorithms.

Abhishek Sharma

Website Developer and SEO Specialist

Abhishek Sharma is a skilled Website Developer, UI Developer, and SEO Specialist, proficient in managing, designing, and developing websites. He excels in creating visually appealing, user-friendly interfaces while optimizing websites for superior search engine performance and online visibility.