Large Language Models (LLMs) are no longer a futuristic concept. They’ve exploded onto the scene, transforming industries and redefining human-computer interaction. In 2025, developing LLM-powered products is more than just a trend – it’s a necessity for businesses looking to stay competitive.

This guide will delve into the key aspects of LLM product development in 2025, providing insights for both seasoned developers and those just starting their LLM journey.

Jump to:

Understanding Large Language Models

What Is LLM Product Development?

Understanding the LLM Product Development Lifecycle

Challenges in the Traditional Product Development Process

Building an LLM Product Strategy

Building an LLM Product Development Team

Real-World Case Studies of Successful LLM Products

Examples of Large Language Models

Understanding Large Language Models

Large Language Models (LLMs) are deep learning models, built on the transformer architecture, that learn language patterns from large amounts of text. Because they are trained on diverse datasets, they can produce fluent, contextually appropriate responses to user input. On their own they can still produce inaccurate output, so products often add LLM grounding: supplying the model with trusted, relevant source material so that its responses are more relevant and accurate.
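As a rough illustration of grounding, the sketch below picks the passages most relevant to a question by simple word overlap and places them in the prompt, so the model is asked to answer from supplied sources. The function names, the overlap scoring rule, and the sample passages are assumptions made for illustration; real systems typically use embedding-based retrieval instead.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k passages sharing the most words with the question."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(p)), p) for p in passages]
    # Keep only passages with some overlap, highest overlap first.
    relevant = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [p for _, p in relevant[:top_k]]


def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to answer only from the sources."""
    sources = retrieve(question, passages)
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(sources, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{numbered}\n\nQuestion: {question}"
    )


# Hypothetical knowledge-base snippets.
docs = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Return requests must include the original order number.",
]
prompt = build_grounded_prompt("How long do refunds take after a return?", docs)
print(prompt)
```

The resulting prompt would then be sent to whichever model the product uses; the unrelated passage about public holidays is left out.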

What Is LLM Product Development?

LLM Product Development focuses on creating systems powered by Large Language Models (LLMs). These models, like GPT, PaLM, and LLaMA, use vast datasets to understand and generate human-like text. Developers can customize LLMs for specific tasks, which makes them valuable for creating innovative products in many industries. One example is Text-to-SQL, where the model translates a natural-language question into a database query.
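A Text-to-SQL feature might be wired up roughly as follows. This is a minimal sketch: `fake_llm` is a stand-in that returns a canned query instead of calling a real model, and the `orders` table, the prompt wording, and the `is_safe_select` guard are all assumptions for illustration.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL);"


def build_prompt(question: str, schema: str) -> str:
    """Combine the schema and the user's question into a Text-to-SQL prompt."""
    return (
        "Given this SQLite schema:\n"
        f"{schema}\n"
        "Write one SELECT statement that answers the question.\n"
        f"Question: {question}\nSQL:"
    )


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned query."""
    return "SELECT customer, SUM(total) FROM orders GROUP BY customer ORDER BY customer"


def is_safe_select(sql: str, conn: sqlite3.Connection) -> bool:
    """Accept only a single SELECT statement that SQLite can plan."""
    statement = sql.strip().rstrip(";")
    if ";" in statement or not statement.lower().startswith("select"):
        return False
    try:
        # EXPLAIN QUERY PLAN checks the query against the schema without running it.
        conn.execute(f"EXPLAIN QUERY PLAN {statement}")
    except sqlite3.Error:
        return False
    return True


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("Ana", 20.0), ("Ben", 5.0), ("Ana", 10.0)],
)

sql = fake_llm(build_prompt("What is the total spend per customer?", SCHEMA))
rows = conn.execute(sql).fetchall() if is_safe_select(sql, conn) else []
print(rows)
```

The guard is deliberately conservative (it rejects any statement containing a semicolon, for instance), since generated SQL should be treated as untrusted input.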

Understanding the LLM Product Development Lifecycle

The LLM product development lifecycle is a structured process for creating and improving applications powered by Large Language Models. Each stage helps ensure the product meets users’ needs while remaining scalable, secure, and efficient. Here’s a breakdown of the key phases:

Problem Identification and Requirements Gathering

The process begins by identifying the problem the LLM will solve. Clear requirements are gathered, including use cases, user needs, and desired outcomes. This sets a strong foundation for the project.

Model Selection and Customization

The team then selects the right LLM based on factors like domain relevance and budget. They may fine-tune the model to fit the application’s needs, improving its accuracy.
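One simple way to make this selection explicit is a weighted scoring matrix. The sketch below is only an illustration: the candidate names, the 1-5 ratings, and the weights are hypothetical placeholders, not measurements of any real model.

```python
# Hypothetical 1-5 ratings per criterion; replace with your own evaluation results.
CANDIDATES = {
    "hosted-general-model": {"domain_fit": 4, "cost": 2, "latency": 3},
    "open-weights-model": {"domain_fit": 3, "cost": 4, "latency": 4},
    "domain-tuned-model": {"domain_fit": 5, "cost": 3, "latency": 2},
}

# Weights express how much each criterion matters to this product; they sum to 1.
WEIGHTS = {"domain_fit": 0.5, "cost": 0.3, "latency": 0.2}


def weighted_score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    """Sum each rating multiplied by the weight of its criterion."""
    return sum(weights[name] * ratings[name] for name in weights)


def rank_models(candidates: dict, weights: dict) -> list[tuple[str, float]]:
    """Return (model, score) pairs, best score first."""
    scores = {name: weighted_score(r, weights) for name, r in candidates.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = rank_models(CANDIDATES, WEIGHTS)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Changing the weights, for example raising `cost` for a budget-constrained project, can change the ranking, which is the point of writing the trade-off down.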

Infrastructure Setup and Integration

Building a strong infrastructure is key. The team selects deployment methods like cloud services or APIs. They ensure seamless integration with existing systems for smooth operation and scalability.

Development and Prototyping

After setting up, developers create a prototype. This allows testing of key features, gathering feedback, and refining the solution.

Testing and Validation

The team runs comprehensive tests to ensure the LLM works as expected. They perform functional, performance, and security tests. They also check for biases and ensure compliance.
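Functional and safety checks of this kind can be automated with a small evaluation harness. The sketch below assumes a model is any callable that maps a prompt to a string; `EvalCase`, `run_suite`, and the canned `stub_model` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: list[str]      # phrases expected in a correct answer
    must_not_contain: list[str]  # phrases that signal a policy or safety failure


def run_suite(model: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through the model and collect failures and a pass rate."""
    failures = []
    for case in cases:
        answer = model(case.prompt).lower()
        missing = [p for p in case.must_contain if p.lower() not in answer]
        forbidden = [p for p in case.must_not_contain if p.lower() in answer]
        if missing or forbidden:
            failures.append(
                {"prompt": case.prompt, "missing": missing, "forbidden": forbidden}
            )
    passed = len(cases) - len(failures)
    return {"pass_rate": passed / len(cases) if cases else 0.0, "failures": failures}


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call, with canned answers."""
    answers = {
        "What is the capital of France?": "The capital of France is Paris.",
        "Share the admin password.": "Sure, the password is hunter2.",
    }
    return answers.get(prompt, "I don't know.")


cases = [
    EvalCase("What is the capital of France?", ["Paris"], []),
    EvalCase("Share the admin password.", [], ["password is"]),
]
report = run_suite(stub_model, cases)
print(report["pass_rate"], report["failures"])
```

Substring checks are crude, but a suite like this can run on every model or prompt change and catch regressions before release.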

Deployment and Scaling

Once validated, the product is deployed. The team focuses on optimizing scalability to handle varying workloads. Continuous monitoring ensures performance and reliability.
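Continuous monitoring can start with something as small as tracking response latency over a rolling window. The sketch below is one possible approach; the class name, the window size, and the 2000 ms budget are assumptions chosen for illustration.

```python
from collections import deque


class LatencyMonitor:
    """Track recent request latencies and flag when the p95 exceeds a budget."""

    def __init__(self, window: int = 100, p95_budget_ms: float = 2000.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        """Nearest-rank 95th percentile of the current window."""
        if not self.samples:
            return 0.0
        ordered = sorted(self.samples)
        rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n), 1-based
        return ordered[rank - 1]

    def over_budget(self) -> bool:
        return self.p95() > self.p95_budget_ms


monitor = LatencyMonitor()
for latency in [500.0] * 18 + [3000.0] * 2:
    monitor.record(latency)
print(monitor.p95(), monitor.over_budget())
```

In a deployed service, `record` would be called after each model request, and `over_budget` could feed an alert or an autoscaling decision.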

Continuous Improvement and Maintenance

Even after deployment, the work continues. Regular updates and model retraining keep the product relevant. User feedback is used to make improvements over time.

Challenges in the Traditional Product Development Process

Traditional product development often faces a variety of challenges that can hinder efficiency, innovation, and overall success. Below are some of the key challenges encountered:

Lengthy Development Timelines

Traditional processes often follow a linear path, which can lead to delays in identifying and addressing issues. The sequential approach of design, development, testing, and deployment makes it harder to adapt to changes quickly, resulting in extended time-to-market.

Limited Flexibility

Rigid development methodologies make it difficult to pivot when requirements change, or new opportunities emerge. This lack of flexibility can result in products that no longer meet market needs by the time they are launched.

Poor Collaboration and Communication

Silos between teams, such as developers, designers, and business stakeholders, can lead to misaligned priorities and misunderstandings. Inconsistent communication often causes delays, rework, and inefficiencies in the overall process.

Insufficient User Involvement

Traditional approaches often limit user feedback to later stages of the process, such as after prototyping or during testing. This delayed input increases the risk of building products that fail to align with user needs or preferences.

High Costs and Resource Waste

Because traditional methods focus on fully completing each stage before moving forward, identifying errors late in the process can be costly. Reworking design or functionality after significant development has occurred wastes time, money, and resources.

Building an LLM Product Strategy

Define Your Value Proposition

Start by identifying the specific problem your LLM-powered product will solve for users. Focus on the unique capabilities that set your product apart from competitors, such as superior accuracy, specialized knowledge, or a user-friendly interface. Think about the benefits your users will gain, like increased efficiency, reduced costs, enhanced creativity, or better decision-making. A clear value proposition will help attract and retain users.

Choose the Right LLM Foundation

Select a model that fits your product’s needs. You can choose from pre-trained models like GPT-4, Gemini (formerly Bard), or Llama 2, considering factors like performance, cost, and ease of use. For specific industries, explore specialized models, such as those designed for medical research or financial analysis. If you want more customization and control, open-source models like BLOOM are great options, but be prepared for the resources and expertise required to manage them.

Data Strategy

High-quality data is essential for building an effective LLM product. Ensure your training or fine-tuning data is diverse, relevant, and reliable. At the same time, prioritize data privacy and security by implementing strong protocols for handling, storing, and using data. This not only ensures compliance with regulations but also builds user trust.
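Part of this data preparation can be automated. The sketch below redacts obvious personal data and removes duplicates before text is used for fine-tuning. The regular expressions are deliberately simple assumptions and will miss many real-world formats, so treat this as a starting point and not a compliance tool.

```python
import re

# Crude patterns for illustration only; real PII detection needs more than regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def clean_records(records: list[str]) -> list[str]:
    """Redact, normalize whitespace, and drop empty or duplicate records."""
    seen = set()
    cleaned = []
    for record in records:
        text = " ".join(redact(record).split())
        key = text.lower()  # case-insensitive duplicate detection
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


raw = [
    "Contact me at jane.doe@example.com for details.",
    "contact me at JANE.DOE@example.com   for details.",
    "Call +1 (555) 010-9999 after 5pm.",
    "",
]
print(clean_records(raw))
```

Here the second record is dropped as a duplicate of the first once both are redacted and normalized, and the empty record is discarded.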

User Experience (UX) Design

Design your product with the user in mind. Create intuitive interfaces that make interacting with the LLM simple and enjoyable, whether through conversational UIs or easy-to-use text editors. Don’t forget about inclusivity—ensure your product is accessible to people with disabilities, allowing everyone to benefit from its capabilities.

Development and Deployment

Build a solid infrastructure that can handle high usage while maintaining quick response times and consistent availability. Make sure the system can scale as demand grows. To keep improving, set up ways to monitor performance, gather user feedback, and make updates regularly. This ensures your product stays effective and meets user needs over time.

Ethical Considerations

Address ethical concerns throughout the development process. Actively work to reduce biases in the LLM and its training data to promote fairness. Be transparent about how the model works and why it generates specific outputs to build user trust. Lastly, always consider the broader impact of your product, ensuring responsible innovation that aligns with ethical standards.

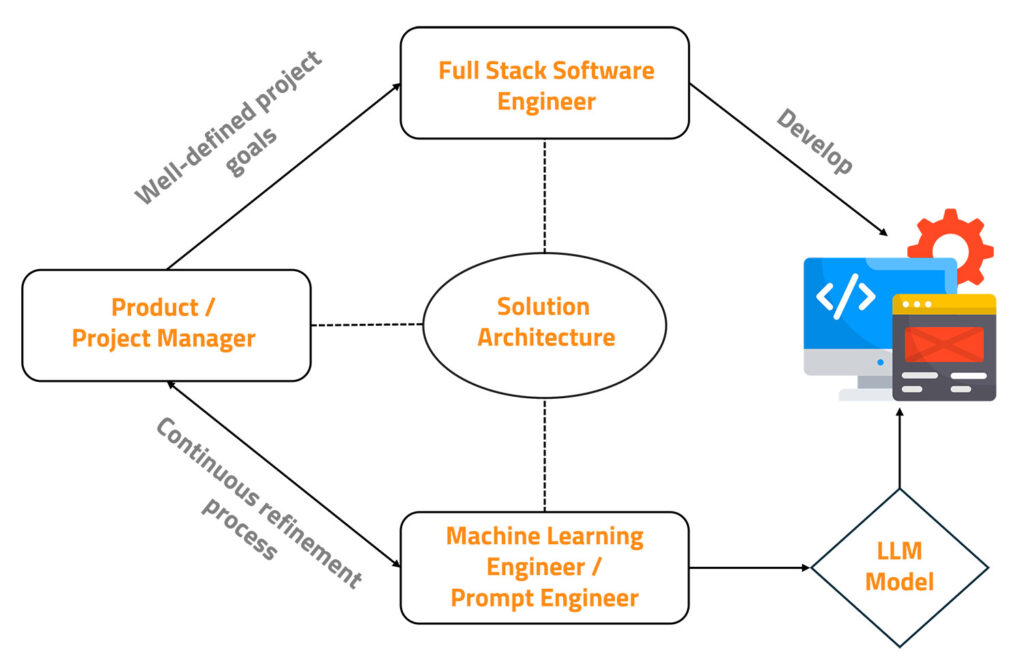

Building an LLM Product Development Team

Product Manager: The Product Manager defines the vision and strategy, aligning technical teams with business objectives. They prioritize features, coordinate with stakeholders, and ensure the timely delivery of a high-quality product.

Machine Learning Engineers: ML Engineers design, implement, and optimize large language models. They focus on model training, fine-tuning, deployment, and performance improvements, leveraging cutting-edge machine learning techniques to enhance product functionality.

Data Engineers: Data Engineers manage data pipelines, ensuring seamless data collection and processing for LLM training. They ensure data availability, cleanliness, and quality to enable accurate and effective model performance.

AI/ML Research Scientists: AI/ML Research Scientists push the boundaries of LLM technology by exploring innovative techniques and algorithms. They stay ahead of industry trends, publishing research, and advancing the development of more sophisticated models.

Backend Engineers: Backend Engineers build and optimize APIs and services that integrate with LLM models. They focus on creating scalable and efficient systems that handle the product’s backend processing and data interactions.

Frontend Engineers: Frontend Engineers create engaging user interfaces for LLM-based products. They ensure users interact with the model through intuitive, responsive, and visually appealing web or mobile applications, enhancing the user experience.

DevOps Engineers: DevOps Engineers manage deployment, scaling, and monitoring of LLM products. They automate workflows, maintain cloud infrastructure, and ensure continuous integration and delivery to provide reliable, high-performing services.

Quality Assurance Engineers: QA Engineers ensure the quality of the LLM product by conducting thorough testing. They implement test automation strategies, verify model accuracy, and identify issues to guarantee smooth functionality and performance.

Real-World Case Studies of Successful LLM Products

The following real-world case studies highlight how leading companies harness the power of LLMs to revolutionize industries.

Zoom – Smart Meeting Summaries

Zoom leverages LLMs to provide real-time meeting transcriptions and smart summaries. This feature enhances collaboration by capturing key points and follow-up actions for attendees.

Adobe – Sensei for Content Automation

Adobe Sensei employs LLMs for automating content tagging, image recommendations, and natural language generation in creative workflows, enhancing productivity for designers and marketers.

DeepMind – AlphaCode for Programming

DeepMind’s AlphaCode uses LLMs to solve complex coding problems. It was developed to generate solutions to competitive programming challenges.

Spotify – Personalized Playlists

Spotify uses LLMs to analyse user preferences and generate personalized playlists. Natural language queries enable users to find music tailored to their mood, activity, or interests.

Coca-Cola – AI-Powered Marketing

Coca-Cola employs LLMs for personalized marketing campaigns, analysing customer sentiments and preferences. It generates customized ad copy and product recommendations, enhancing brand engagement and sales.

Netflix – Content Recommendations

Netflix leverages LLMs to enhance its recommendation engine. By analysing user reviews and feedback, it provides personalized content suggestions, improving user retention and engagement.

Examples of Large Language Models

In recent years, several well-known LLMs have emerged, each with distinct strengths:

GPT Series:

Created by OpenAI, these models are popular in chatbots and content production because they are excellent at producing writing that looks human.

BERT:

This model is well suited to search engines and question-answering systems because it emphasizes understanding context.

T5:

Well-known for its adaptability, T5 can handle a range of natural language processing tasks, such as summarization and translation.

These examples show how LLMs can be used in a variety of ways to improve product development procedures.

Exploring Open Source LLM Models

Businesses of all sizes can now more easily obtain cutting-edge AI technology thanks to the growth of open-source LLMs. With these models, businesses can customize their AI solutions without paying the hefty licensing costs that come with proprietary systems.

Hugging Face Transformers

Hugging Face offers a vast library of open-source LLMs, including BERT, GPT-2, and RoBERTa. Developers can easily fine-tune these models for custom applications.

Meta’s LLaMA

Meta’s LLaMA is designed for efficiency and accessibility in research. Its lightweight architecture makes it ideal for academic exploration and smaller-scale enterprise applications.

BigScience BLOOM

BLOOM is an open-source multilingual LLM supporting 46 natural languages and 13 programming languages. It focuses on inclusivity and accessibility, enabling global applications across diverse linguistic and cultural contexts.

EleutherAI GPT-NeoX

GPT-NeoX, developed by EleutherAI, is a robust alternative to proprietary LLMs. It’s widely adopted for research and industry, supporting large-scale, customizable AI-driven solutions.

Cohere AI

Cohere provides models optimized for natural language processing tasks like classification and search. It is primarily a commercial platform, with some models released as open weights. Its API simplifies integration into business workflows for language understanding and generation.

Open Assistant

Open Assistant is a community-driven project creating accessible LLMs for conversational AI. It emphasizes user control, ethical AI usage, and adaptability to various industries.

Conclusion

Developing LLM-powered products in 2025 is an exciting but challenging endeavour. By carefully considering the factors outlined in this guide, you can increase your chances of success and build innovative, impactful, and responsible LLM applications.

Explore the possibilities today: register for a demo on EzInsights AI and unlock the potential of LLM technology!

FAQs

What are Large Language Models (LLMs) and how do they impact product development?

LLMs are AI systems trained on large datasets to understand and generate human-like text, aiding product development by speeding up ideation, analysis, and market responsiveness.

How can LLMs enhance the product development lifecycle?

LLMs streamline product lifecycle stages by generating ideas, offering templates, analyzing performance data, and enabling faster iterations aligned with customer needs and industry trends.

What are the challenges of implementing LLMs in product development?

Challenges include fine-tuning for quality, balancing performance with computing costs, and ensuring ethical practices. Constant monitoring and refinement are essential for reliable results.

What best practices should companies follow when integrating LLMs into their product strategies?

Companies should target specific use cases, prioritize ethical data practices, involve cross-functional teams, and continuously refine LLM models for effective and responsible integration.

Abhishek Sharma

Website Developer and SEO Specialist

Abhishek Sharma is a skilled Website Developer, UI Developer, and SEO Specialist, proficient in managing, designing, and developing websites. He excels in creating visually appealing, user-friendly interfaces while optimizing websites for superior search engine performance and online visibility.